Snowflake BUILD 2025 Recap

Jeff Skoldberg

Friday, November 21, 2025

Snowflake BUILD 2025 Recap: Key Announcements

Snowflake BUILD 2025 brought a wave of new features and partnerships focused on AI, data integration, and developer productivity. Here's a quick rundown of the major announcements.

Data Foundation - Integration and Connectivity

Building AI products starts with building a clean data foundation.

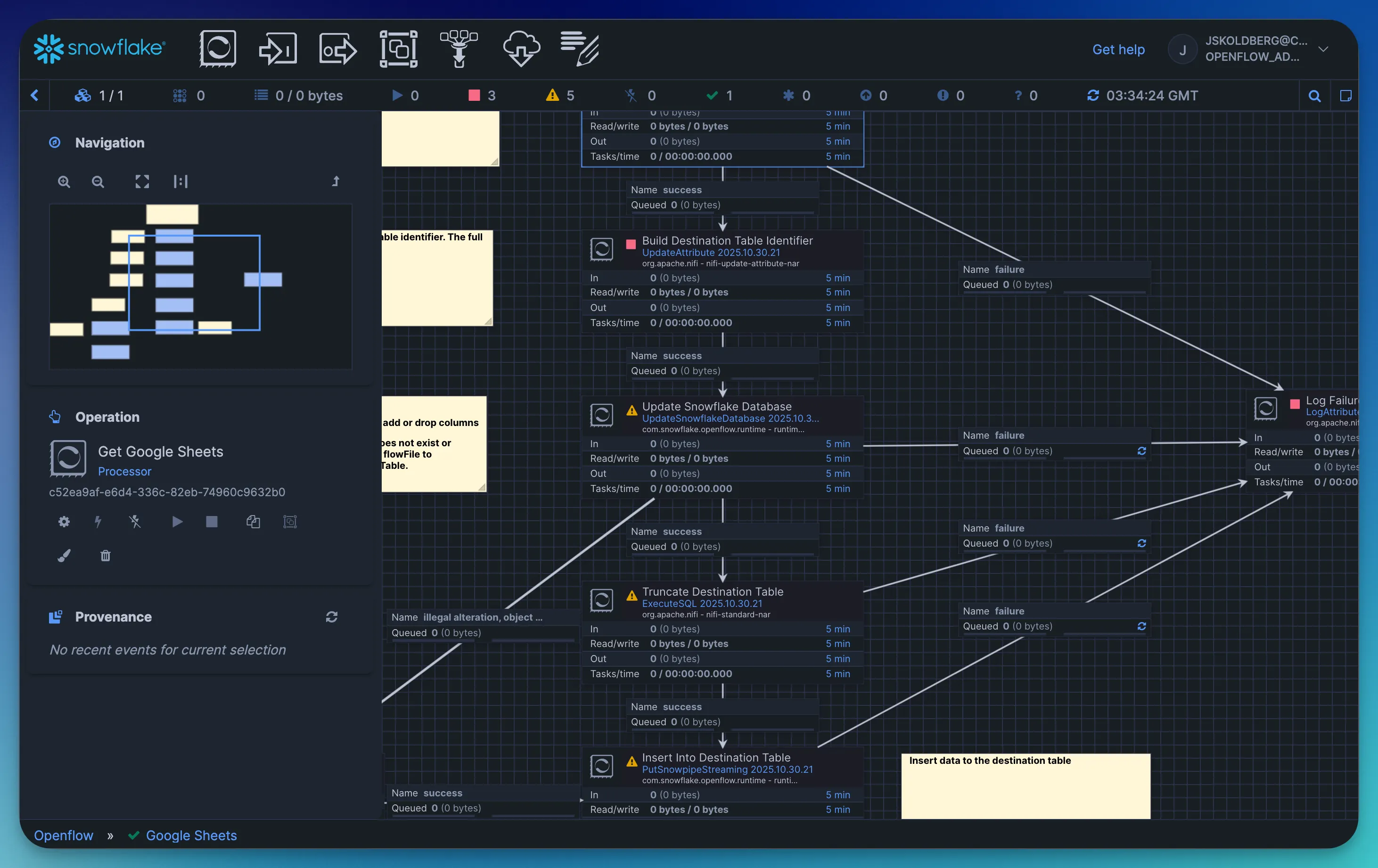

Openflow Snowflake Deployment (GA)

An open, extensible, managed, multimodal data integration service that supports structured and unstructured data in both batch and streaming modes. Openflow is now GA on both AWS and Azure as a Snowflake-managed deployment, making data movement much simpler. Previously it was only available as “Bring Your Own Cloud” (BYOC), where Openflow was deployed in AWS and then connected to Snowflake. The Snowflake deployment makes creation and management easy. I tried it over the weekend: setup was easy, but using the product has a bit of a learning curve.

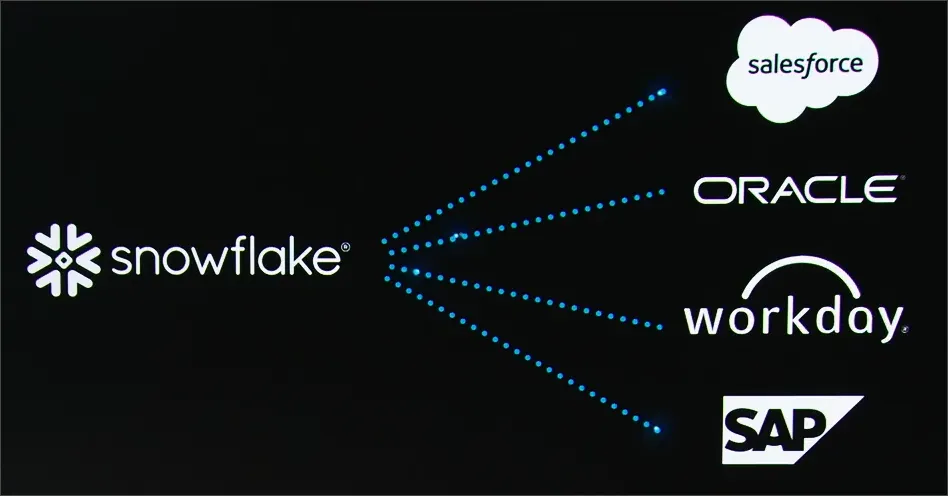

Snowflake AI Data Cloud meets SAP Business Data Cloud

Snowflake announced bi-directional, zero-copy integration between SAP Business Data Cloud (BDC) and the Snowflake AI Data Cloud. It provides simplified access to curated, business-ready SAP data with its business context preserved, for faster AI and analytics. SAP BDC harmonizes data across SAP applications. SAP customers who are not yet Snowflake customers will gain access to SAP Snowflake, a Snowflake offering embedded in SAP BDC.

Snowflake now has zero-copy integrations with Workday (in development), Salesforce, Oracle (Public Preview soon), and SAP.

Snowflake AI Announcements

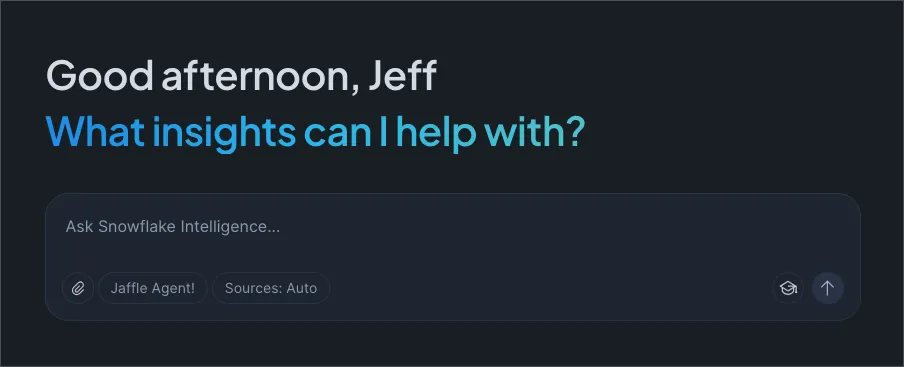

Snowflake Intelligence (GA)

Snowflake Intelligence is being called “the future of work.” It is Snowflake’s ChatGPT-like interface for non-technical business users. We’ve been talking about it a lot at SELECT, and it is now GA in all Snowflake clouds.

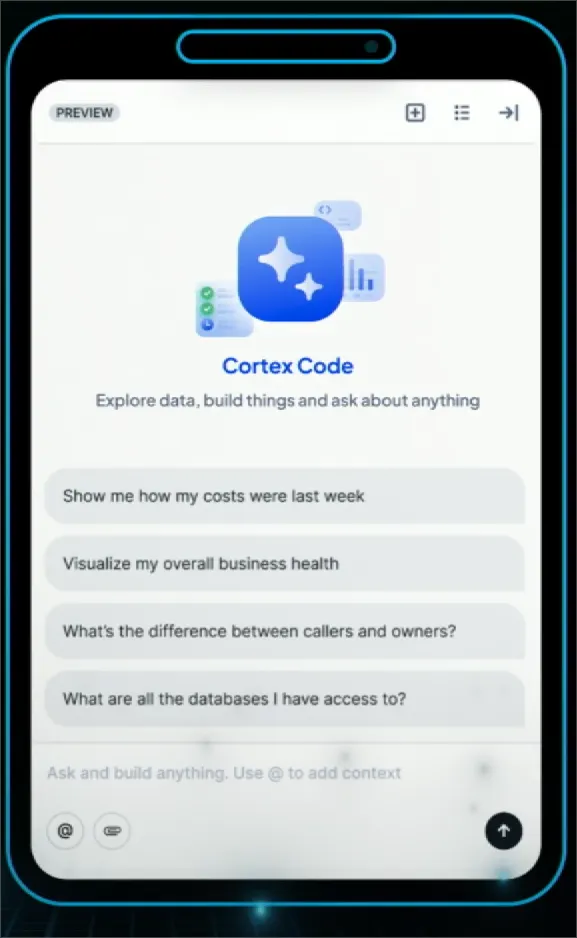

Cortex Code (Private Preview)

Cortex Code is an AI assistant, built directly into Snowsight, for writing code and investigating your Snowflake infrastructure. It helps with administrative tasks and with security and governance, and it generates code from natural-language prompts.

Cortex Agents API (GA)

Snowflake's managed AI agents retrieve and analyze both structured and unstructured data using robust reasoning models. They deliver accurate insights through a convenient REST API with unified security and governance.

Cortex Knowledge Extensions (GA)

Cortex Knowledge Extensions (CKEs) bring third-party unstructured data into agentic systems through secure sharing of documents indexed with Cortex Search Service. You can access licensed content sources, such as news and research, in near real time while your prompts stay within your account. This feature has moved from Public Preview to GA.

Snowflake Managed MCP Server (GA)

The Snowflake-managed MCP server exposes your Snowflake investments to your AI tool of choice through the Model Context Protocol (MCP). AI tools such as Claude, ChatGPT, or other LLM-powered applications can securely access and interact with your Snowflake data and infrastructure. Because MCP is an open standard, your AI assistant can query data, understand your schema, execute SQL, and leverage your Snowflake investments without custom integrations.

More info about creating the MCP server inside your Snowflake account can be found here.
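For readers new to MCP: under the hood, an MCP client talks to the server with JSON-RPC 2.0 messages. The minimal Python sketch below only builds the two requests an assistant typically sends, `tools/list` and `tools/call`. It does not connect to Snowflake, it skips the `initialize` handshake a real client performs first, and the tool name `run_sql` and its `statement` argument are hypothetical placeholders, not names taken from Snowflake's server.

```python
import json
from typing import Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = make_request(1, "tools/list")

# Invoke a tool. "run_sql" and "statement" are HYPOTHETICAL placeholders;
# a real client would use the names returned by tools/list.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "run_sql", "arguments": {"statement": "SELECT CURRENT_VERSION()"}},
)

print(list_tools)
print(call_tool)
```

In practice your AI tool's MCP client builds and sends these messages for you; the point is that the assistant discovers tools first and then calls them by name with structured arguments.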

Online Feature Store for ML (Public Preview)

Low-latency serving (<50 ms at P90) of ML features for online inference use cases such as fraud detection and real-time recommendations. It keeps online features automatically consistent with offline feature pipelines and provides high availability without infrastructure management.
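P90 means 90% of requests are served at or below that latency. As a rough illustration (not how Snowflake measures it), here is a nearest-rank percentile over a hypothetical batch of feature-lookup latencies, checked against the 50 ms figure:

```python
def percentile_nearest_rank(samples_ms, pct):
    """Return the smallest sample such that at least pct% of samples are <= it.

    pct must be an integer in (0, 100].
    """
    if not samples_ms:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    # Integer ceiling of pct * n / 100 gives the 1-based nearest rank,
    # avoiding floating-point rounding surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds; one slow outlier does not move P90.
samples = [12, 15, 18, 20, 22, 25, 28, 31, 40, 75]
p90 = percentile_nearest_rank(samples, 90)
print(p90, p90 < 50)
```

Note how the single 75 ms outlier does not affect P90; a P90 target bounds typical latency, not the worst case.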

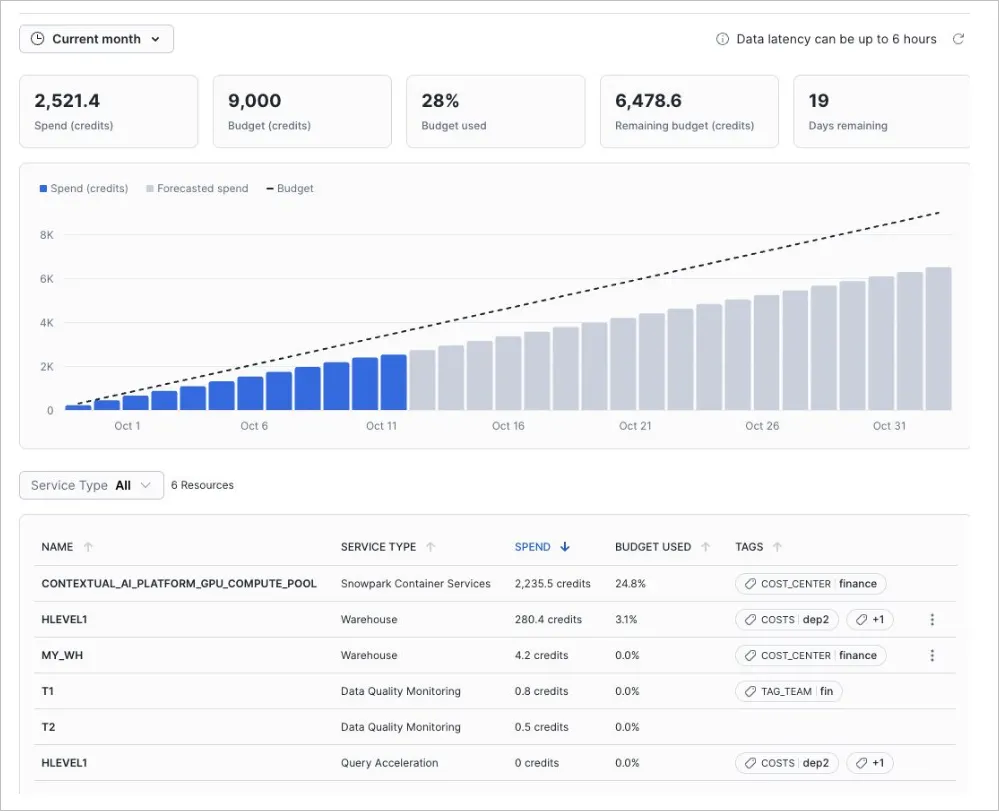

Cost Governance Controls for AISQL (GA Soon)

A custom tagging framework that allows admins to track, manage, and automatically enforce AI spending budgets. Set up notifications or custom actions triggered when spending exceeds specific thresholds.
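The idea behind tag-based budget enforcement can be modeled in a few lines: sum AI credits per tag, then compare each total to its budget. This is a hypothetical Python model of the concept, not Snowflake's API; the tag names, budgets, and 80% warning threshold are made up for illustration.

```python
from collections import defaultdict


def check_budgets(usage, budgets, warn_fraction=0.8):
    """Sum AI credit usage per tag and flag tags nearing or over budget.

    usage: iterable of (tag, credits) tuples
    budgets: dict mapping tag -> credit budget
    Returns a dict mapping tag -> "ok" | "warn" | "over".
    """
    spent = defaultdict(float)
    for tag, credits in usage:
        spent[tag] += credits

    status = {}
    for tag, budget in budgets.items():
        used = spent.get(tag, 0.0)
        if used > budget:
            status[tag] = "over"  # e.g. trigger a custom action
        elif used >= warn_fraction * budget:
            status[tag] = "warn"  # e.g. send a notification
        else:
            status[tag] = "ok"
    return status


# Hypothetical tags and credit amounts.
usage = [("marketing", 30.0), ("finance", 9.0), ("marketing", 55.0)]
budgets = {"marketing": 80.0, "finance": 10.0, "support": 20.0}
status = check_budgets(usage, budgets)
print(status)
```

Separating the "warn" and "over" states mirrors the two responses the feature describes: a notification before the limit, and an action once it is exceeded.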

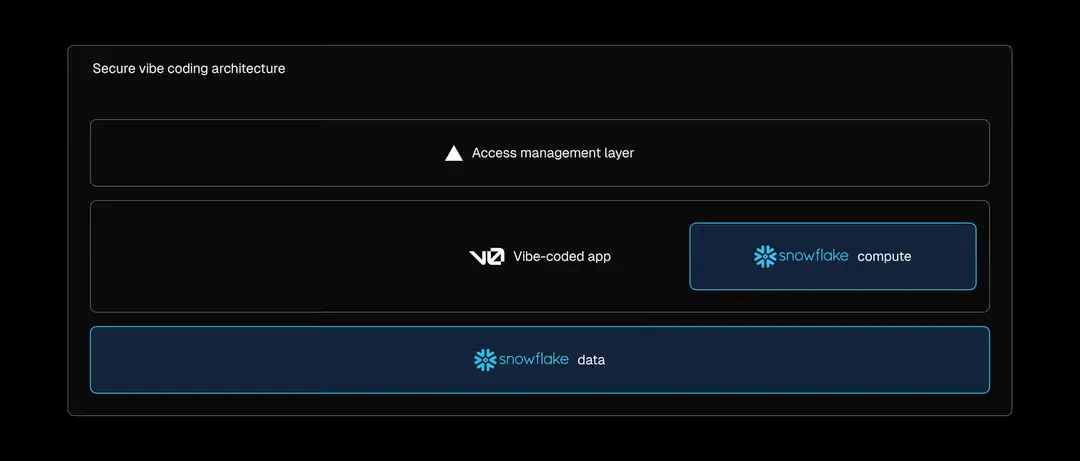

Snowflake Vercel Partnership

Snowflake announced an integration with Vercel’s AI-powered development tool, v0. It lets users build data-driven Next.js applications using natural language and deploy them to Snowpark Container Services. The split architecture keeps compute and data within Snowflake while Vercel manages the application and authentication layers, and the apps automatically inherit existing Snowflake security policies. Users can chat with v0 to query data and generate complete applications, including API routes, that deploy with a single click. The integration is currently waitlist-only.

From what I gather, this will make it easy to create beautiful, modern AI applications deployed in Snowflake.

Data Platform & Infrastructure

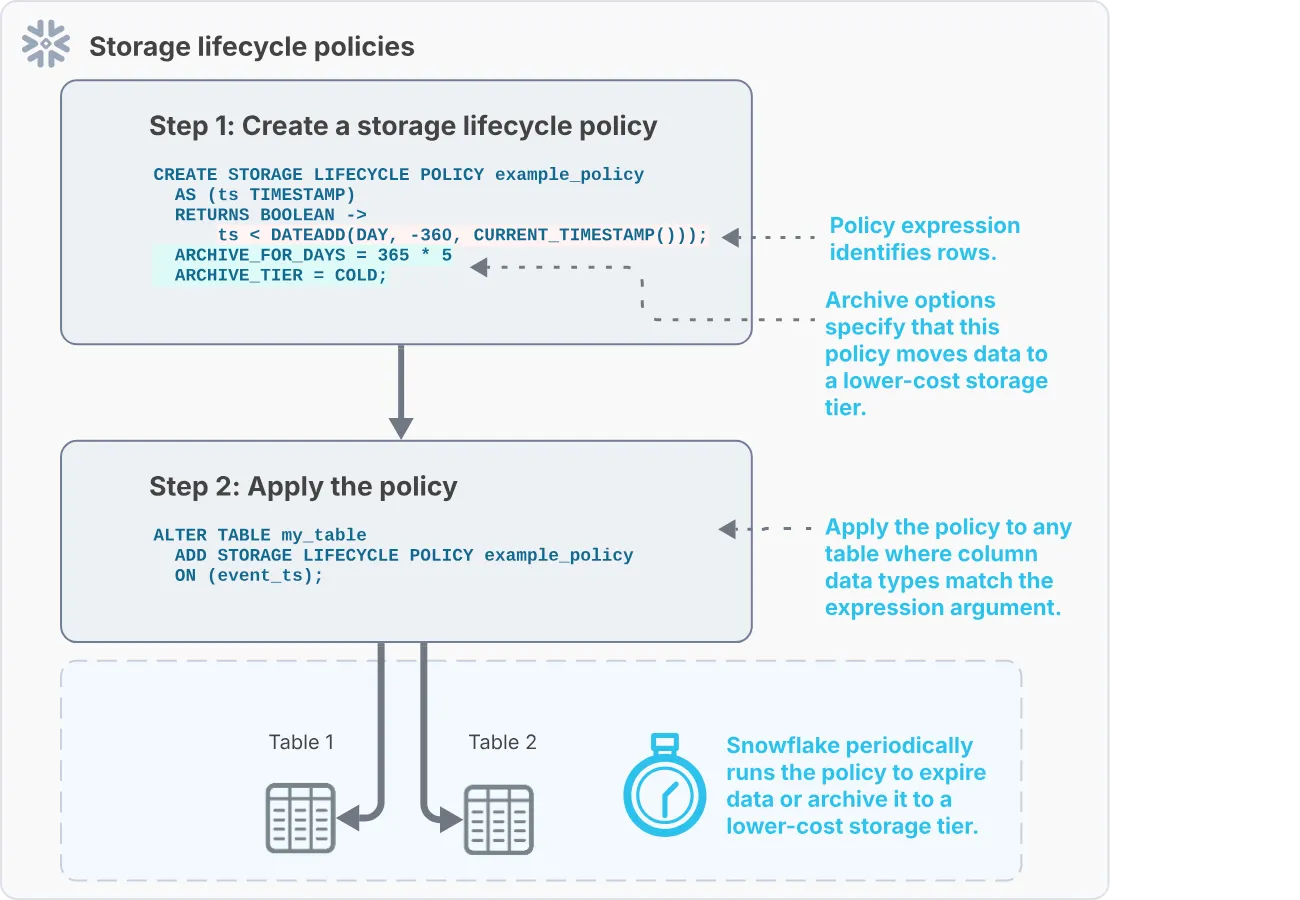

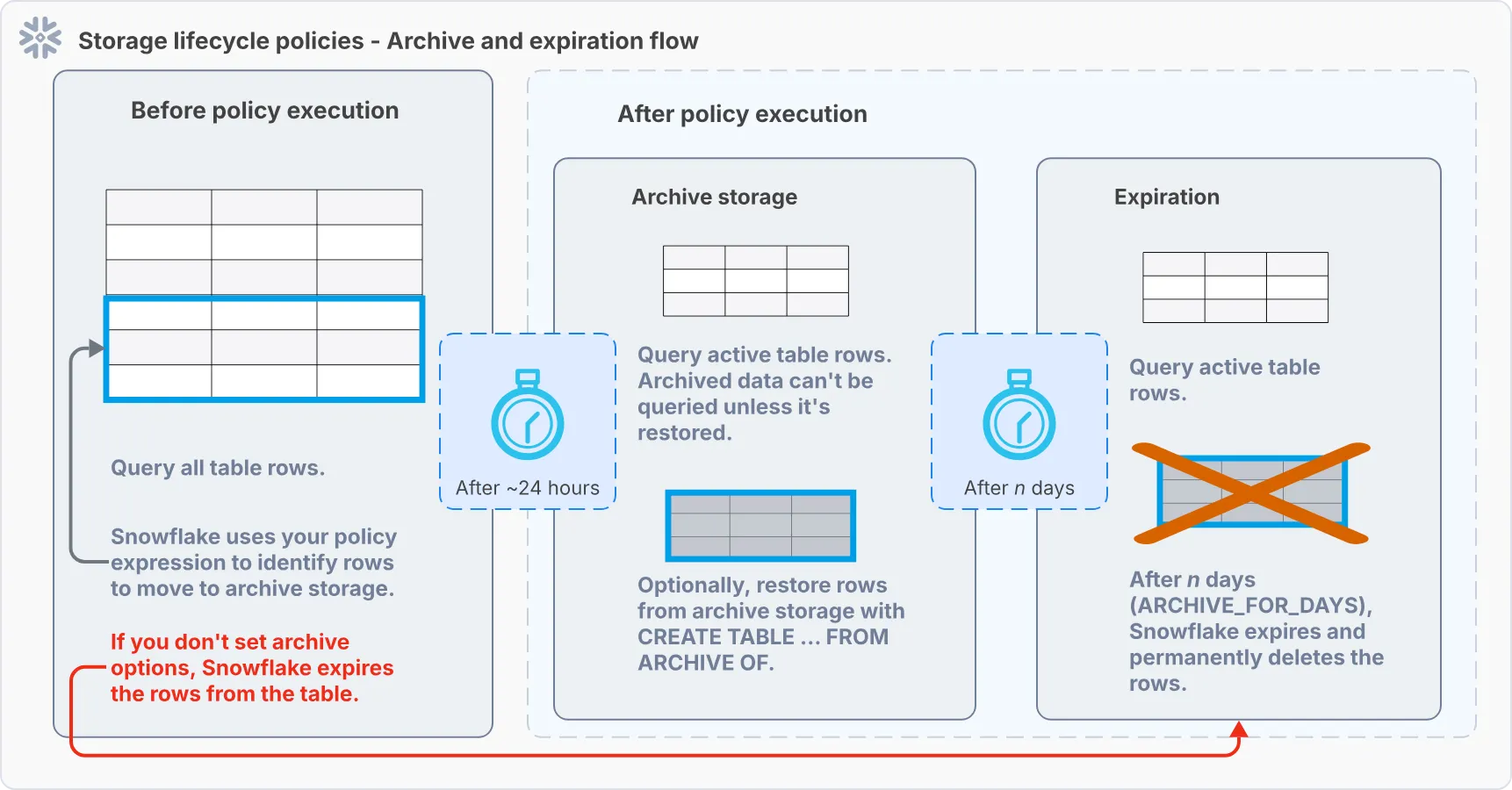

Storage Lifecycle Policies

Storage Lifecycle Policies are a new feature every data engineer should get familiar with. They automatically move rows to COOL or COLD storage, or delete them, based on the policy you set and each record's age. This replaces the stored procedures previously needed to enforce data retention and adds the benefit of lower-cost storage tiers. A picture is worth a thousand words, so please review these images from the Snowflake docs.
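To make the age-based logic concrete, here is a minimal Python model of how a policy might classify a record. The thresholds (365, 730, and 2,555 days) are hypothetical, and real policies are defined in Snowflake rather than in client code; this only illustrates the decision order from oldest to newest.

```python
from datetime import date, timedelta

# HYPOTHETICAL thresholds for illustration only; real Storage Lifecycle
# Policies are configured in Snowflake, not in Python.
COOL_AFTER_DAYS = 365
COLD_AFTER_DAYS = 730
DELETE_AFTER_DAYS = 2555  # roughly 7 years


def tier_for(record_date: date, today: date) -> str:
    """Return the target tier for a record based on its age in days."""
    age = (today - record_date).days
    # Check the oldest threshold first so each record gets exactly one action.
    if age >= DELETE_AFTER_DAYS:
        return "DELETE"
    if age >= COLD_AFTER_DAYS:
        return "COLD"
    if age >= COOL_AFTER_DAYS:
        return "COOL"
    return "STANDARD"


today = date(2025, 11, 21)
for d in (date(2025, 1, 1), date(2024, 6, 1), date(2023, 1, 1), date(2015, 1, 1)):
    print(d, tier_for(d, today))
```

When choosing real thresholds, keep the minimum durations described in the cost breakdown in mind: moving data out of COOL before 90 days or out of COLD before 180 days triggers a fee.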

Let’s review all of the cost elements of Snowflake Storage Lifecycle Policies:

Storage:

- Cool storage costs $4 per TB/mo on AWS, and the data remains readily available for querying. Once you move data here, you must keep it for 90 days or Snowflake charges a minimum storage duration fee.

- Cold storage costs $1 per TB/mo. Cold tier data retrieval can take up to 48 hours. Minimum archival duration is 180 days with a fee for dropping data early.

Policy execution cost / archival cost:

- Policies run once every 24 hours, using Snowflake-managed serverless compute to move your data. This serverless compute has a 50% credit multiplier, meaning it costs half as much as a traditional warehouse. Snowflake determines the compute size needed.

- You are also charged 0.05 credits per 1,000 files archived.

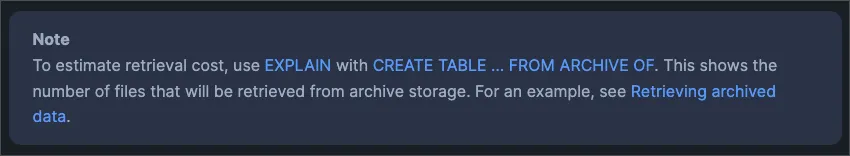

Data retrieval cost:

- You are charged 0.05 credits per 1,000 files retrieved.
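Putting these rates together, a back-of-the-envelope monthly estimate might look like the sketch below. The rates are the AWS figures quoted in this post; the dollar price per credit, data volumes, and file counts are hypothetical inputs. Serverless policy-execution credits are taken as billed (multiplier already applied), and minimum-duration fees for early deletion are not modeled.

```python
# Rates quoted in this post (AWS); check current Snowflake pricing for your region.
COOL_USD_PER_TB_MONTH = 4.0
COLD_USD_PER_TB_MONTH = 1.0
CREDITS_PER_1000_FILES_ARCHIVED = 0.05
CREDITS_PER_1000_FILES_RETRIEVED = 0.05


def monthly_lifecycle_cost(cool_tb, cold_tb, files_archived, files_retrieved,
                           usd_per_credit, serverless_credits=0.0):
    """Estimate one month of storage lifecycle cost.

    serverless_credits: policy-execution credits as billed (multiplier applied).
    Early-deletion (minimum duration) fees are not included.
    """
    storage_usd = cool_tb * COOL_USD_PER_TB_MONTH + cold_tb * COLD_USD_PER_TB_MONTH
    file_credits = (
        files_archived / 1000 * CREDITS_PER_1000_FILES_ARCHIVED
        + files_retrieved / 1000 * CREDITS_PER_1000_FILES_RETRIEVED
    )
    total_credits = file_credits + serverless_credits
    return {
        "storage_usd": storage_usd,
        "credits": total_credits,
        "total_usd": storage_usd + total_credits * usd_per_credit,
    }


# Hypothetical month: 10 TB cool, 50 TB cold, 200k files archived,
# 20k files retrieved, $3.00 per credit, serverless credits not counted.
estimate = monthly_lifecycle_cost(10, 50, 200_000, 20_000, usd_per_credit=3.0)
print(estimate)
```

Per TB, cold storage costs a quarter of cool storage, but the trade-off is retrieval that can take up to 48 hours and a 180-day minimum instead of 90.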

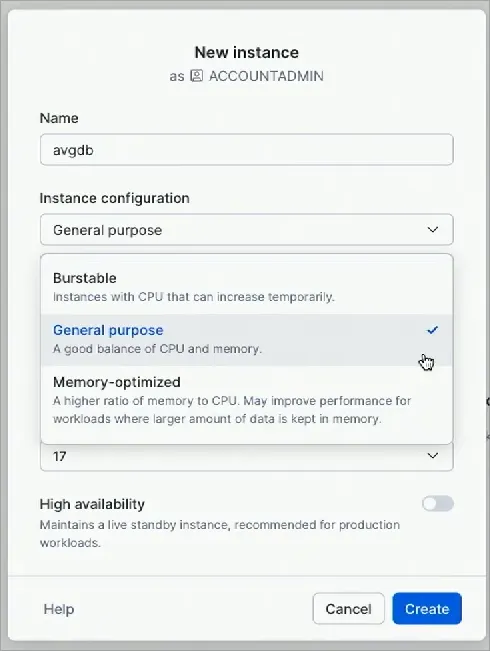

Snowflake Postgres (Public Preview Soon, limited regions)

A fully managed PostgreSQL service on the Snowflake AI Data Cloud, designed for fast, high-volume transactional (OLTP) workloads. You can get up and running in minutes with low management and admin overhead. It includes popular Postgres extensions out of the box, such as pgvector and PostGIS.

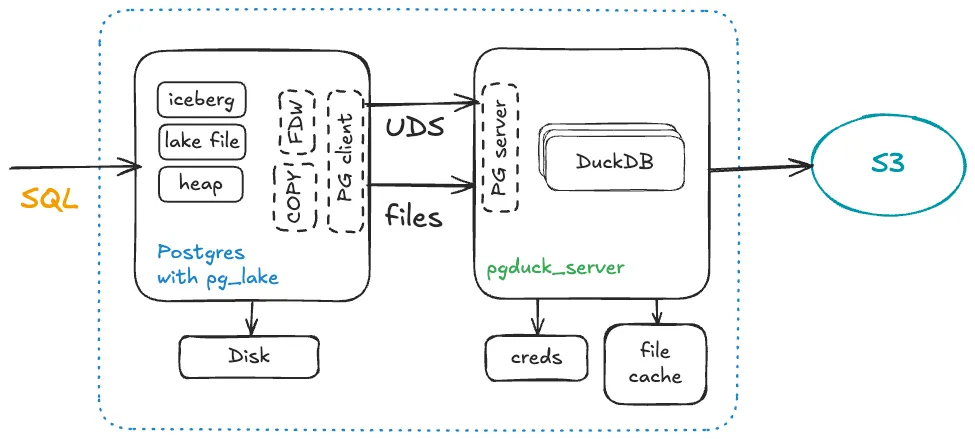

pg_lake (GA)

“pg_lake” is a set of open source PostgreSQL extensions that let PostgreSQL manage your Iceberg catalog. DuckLake was the first to do this, and it makes sense to use a relational database to manage the Iceberg catalog. This should make exploring versions and time travel easier and provide a performance boost for the Iceberg metadata layer. For more info, check out the GitHub repo.

Hybrid Tables on Azure (GA)

Hybrid tables, which combine transactional and analytical capabilities, are now generally available on Azure.

Horizon Catalog: Writes to Any Iceberg (GA)

Apache Polaris is now embedded in Horizon Catalog, enabling writes to any Iceberg table with the same tagging, governance, and lineage capabilities.

Business Continuity and Disaster Recovery for Snowflake Managed Iceberg Tables (GA)

BCDR (Business Continuity and Disaster Recovery) is now available for Snowflake-managed Iceberg tables.

Interactive Tables & Warehouses (GA Soon)

Interactive Tables are optimized for real-time streaming ingestion and querying. They run on Interactive Warehouses, which are always on with pre-warmed caches. Together they are designed for sub-second analytics that require low latency and high concurrency.

Developer Experience

Workspaces (GA)

Workspaces are now GA! In case you haven't heard, Workspaces are a centralized, file-based development environment inside Snowsight for unified code editing and version control. They connect to Git for version control and collaboration, and they can also run dbt. Snowflake also announced Shared Workspaces, which let you share a workspace with your team.

Enhanced Git Integration (GA)

Snowsight now supports self-hosted repositories with major improvements to Git integration. A GitHub Actions app is available in the Marketplace to streamline CI/CD integration.

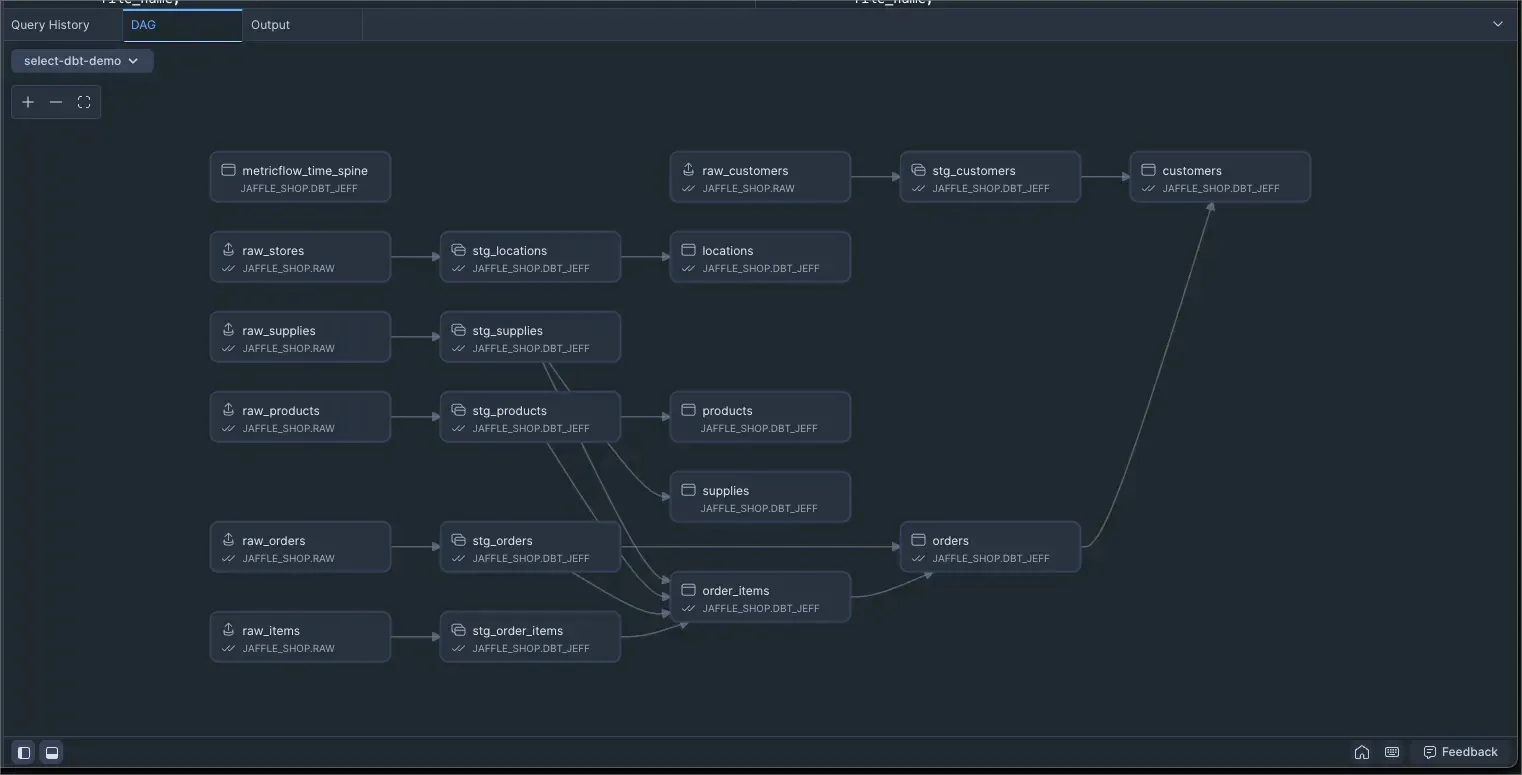

dbt Projects on Snowflake (GA)

Build, test, deploy, and monitor dbt data transformation projects directly in Snowflake. No need to manage dbt Core infrastructure. Now you can import existing projects or create new ones in Snowsight Workspaces. This has been in Public Preview since Snowflake Summit in June and is now GA.

Snowpark Connect for Apache Spark™ (GA)

Run existing Apache Spark (DataFrame, SQL) code directly on Snowflake with minimal migration. Snowpark Connect eliminates Spark cluster management and egress fees, and Snowflake cites an average of 5.6x faster performance and 41% TCO savings. This has been in preview since our last “What’s New in Snowflake” recap, and Snowflake has now moved it to GA.

Snowflake Optima (GA)

A workload optimization engine that automatically and continuously learns from your usage patterns. Handles indexing and metadata collection, removing the need for manual tuning to improve query performance.

Data Sharing & Collaboration

Sharing of Semantic Views (GA)

Data providers can now share semantic views along with structured data, enabling natural language querying. Developers can easily integrate shared data in AI apps and agentic systems without additional preprocessing.

Open Table Format Sharing (GA)

Share data stored in open table formats like Iceberg across the Snowflake platform.

Wrap up

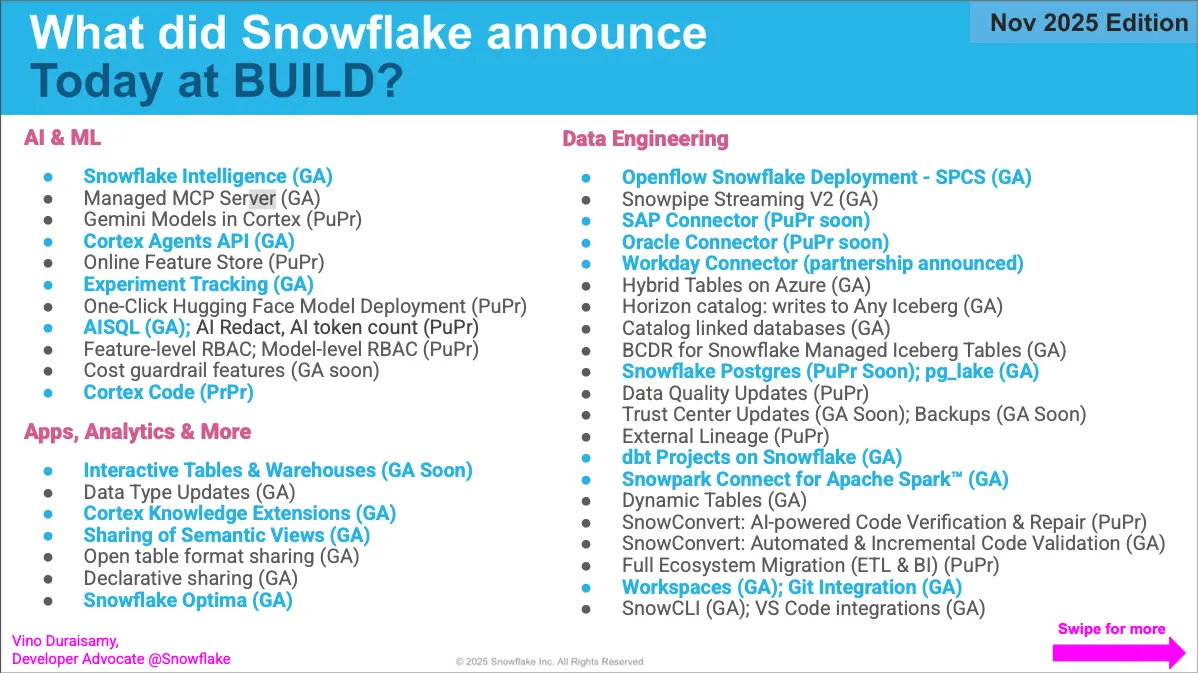

This article covers the highlights from Snowflake BUILD 2025. Thanks to Vino Duraisamy for publishing this great summary slide on LinkedIn, which covers a few more announcements.

Jeff Skoldberg is a Sales Engineer at SELECT, helping customers get maximum value out of the SELECT app to reduce their Snowflake spend. Prior to joining SELECT, Jeff was a Data and Analytics Consultant with 15+ years of experience in automating insights and using data to control business processes. From a technology standpoint, he specializes in Snowflake + dbt + Tableau. From a business topic standpoint, he has experience in Public Utility, Clinical Trials, Publishing, CPG, and Manufacturing.
