What's New in Snowflake: June 2025

Jeff Skoldberg

Monday, July 14, 2025

Welcome to our June 2025 edition of Last Month in Snowflake, where we cover the most impactful announcements from the past month. June was an announcement-heavy month due to Snowflake Summit. In this post, we will not repeat the 30+ announcements we cataloged in our Summit 2025 Recap post. Instead, we will call out the announcements from June 2025 that matter most to the average Snowflake user (with some overlap on Summit announcements). Let’s go!

Snowsight Updates

Here are the updates to Snowsight we’re most excited about!

Workspaces — Public Preview

One of the most exciting announcements from Summit was a new feature called Workspaces, a modern file-based IDE in Snowsight that integrates with Git (GitHub, GitLab, etc.). It brings IDE-style functionality like folders, Git integration, AI-powered code suggestions, and native support for dbt to the Snowflake UI. Workspaces allow users to organize code in projects, collaborate more easily, push and pull from GitHub (or other Git providers), and streamline development workflows without leaving the Snowflake platform.

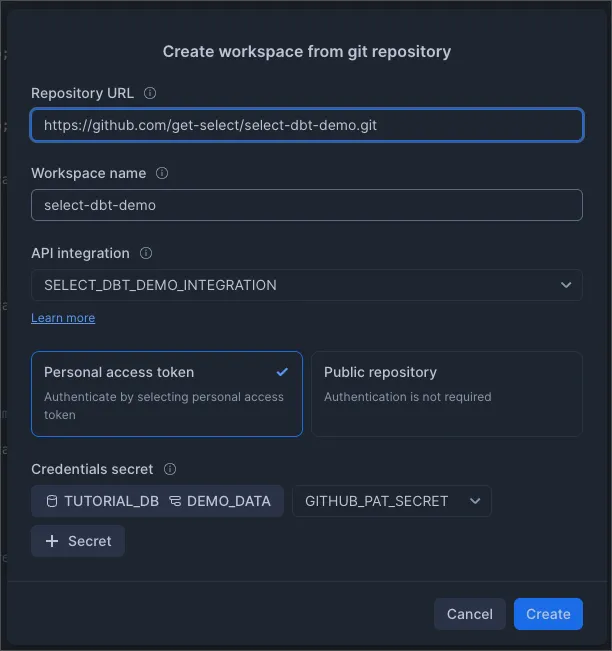

Workspaces are especially powerful when you connect a Git repo. To add a repo, the only prerequisite is an API Integration (and a Secret if the repo is not public); the rest is done via the GUI.
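As a rough sketch of that prerequisite, the small Python helper below renders the SQL you would run once before connecting a repo in the GUI. The object names, URL prefix, and credentials are hypothetical placeholders, and the statements assume the standard Snowflake Git integration syntax (an API Integration with the git_https_api provider, plus a password-type Secret for private repos); check the Snowflake docs for your Git provider before using it.

```python
# Sketch only: renders the prerequisite SQL as text; it does not connect to Snowflake.
# All object names, URLs, and credentials passed in are hypothetical placeholders.

SECRET_SQL = """
CREATE OR REPLACE SECRET {secret}
  TYPE = password
  USERNAME = '{username}'
  PASSWORD = '{token}';
""".strip()

API_INTEGRATION_SQL = """
CREATE OR REPLACE API INTEGRATION {integration}
  API_PROVIDER = git_https_api
  API_ALLOWED_PREFIXES = ('{url_prefix}')
  {secrets_clause}ENABLED = TRUE;
""".strip()


def git_prerequisites(integration, url_prefix, secret=None, username="", token=""):
    """Return the SQL statements to run before connecting a repo in the GUI."""
    statements = []
    secrets_clause = ""
    if secret is not None:
        # Private repo: create a Secret and allow the integration to use it.
        statements.append(
            SECRET_SQL.format(secret=secret, username=username, token=token)
        )
        secrets_clause = f"ALLOWED_AUTHENTICATION_SECRETS = ({secret})\n  "
    statements.append(
        API_INTEGRATION_SQL.format(
            integration=integration,
            url_prefix=url_prefix,
            secrets_clause=secrets_clause,
        )
    )
    return statements


if __name__ == "__main__":
    for statement in git_prerequisites(
        "my_git_api_integration",
        "https://github.com/my-org",
        secret="my_git_secret",
        username="my-user",
        token="<personal access token>",
    ):
        print(statement, end="\n\n")
```

For a public repo, call the helper without a secret and only the API Integration statement is produced.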

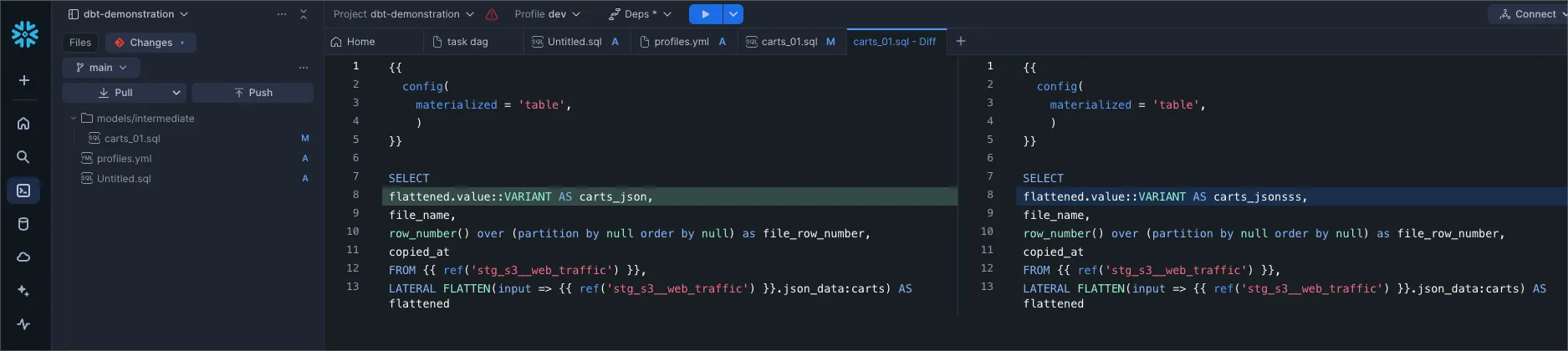

Workspaces have a user-friendly Git integration, allowing you to select a branch, push, pull, and compare diffs in the UI.

Overall thoughts on Workspaces

Personally, I’ve left Worksheets behind and only use Workspaces now, simply because I can organize files into folders and navigate the file tree more easily than in the Worksheets interface. Even when I’m not connected to a Git repo, I still prefer Workspaces due to the improved layout, access to query history, and the inline Copilot.

There are too many features in Workspaces to cover here; in the future we’ll create a dedicated post for Workspaces Tips and Tricks (including dbt projects).

dbt Projects in Snowflake — Public Preview

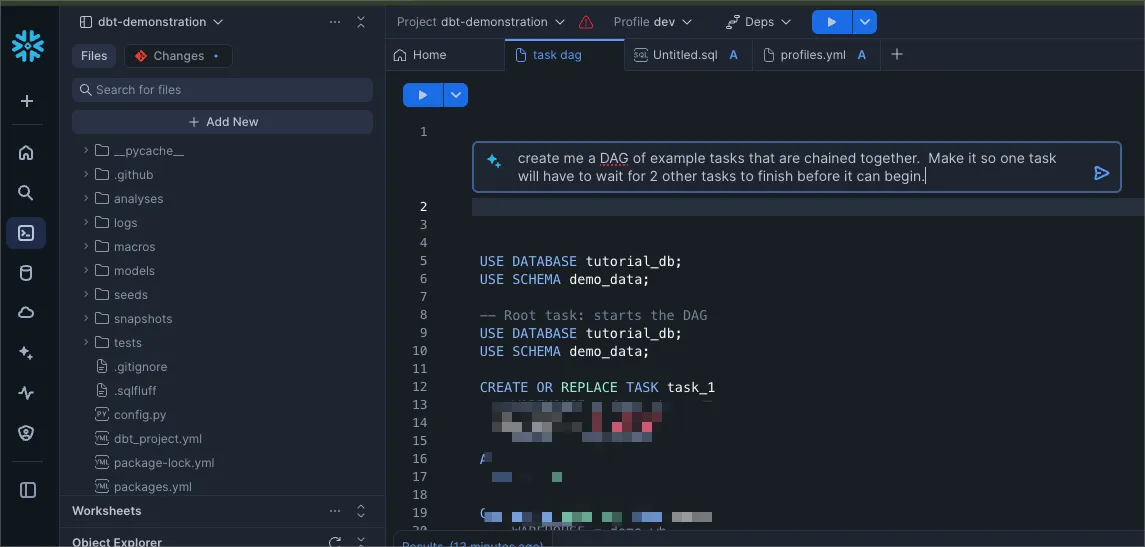

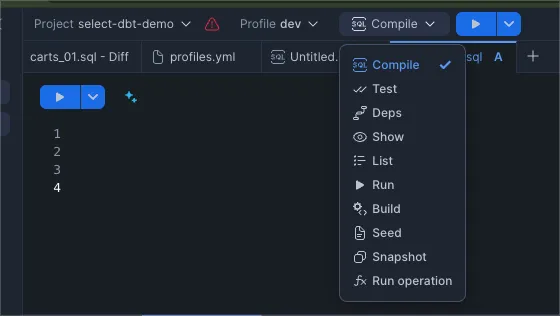

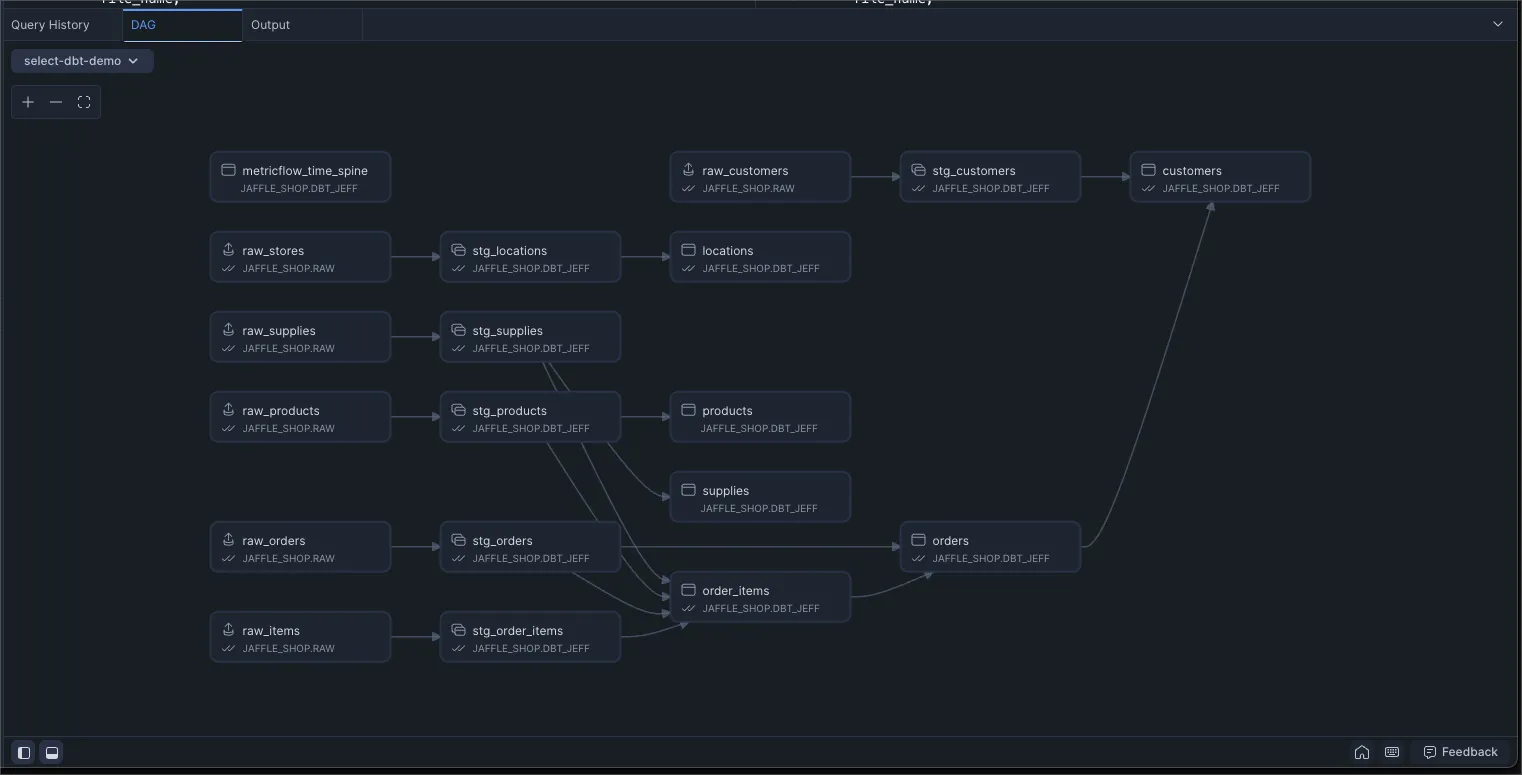

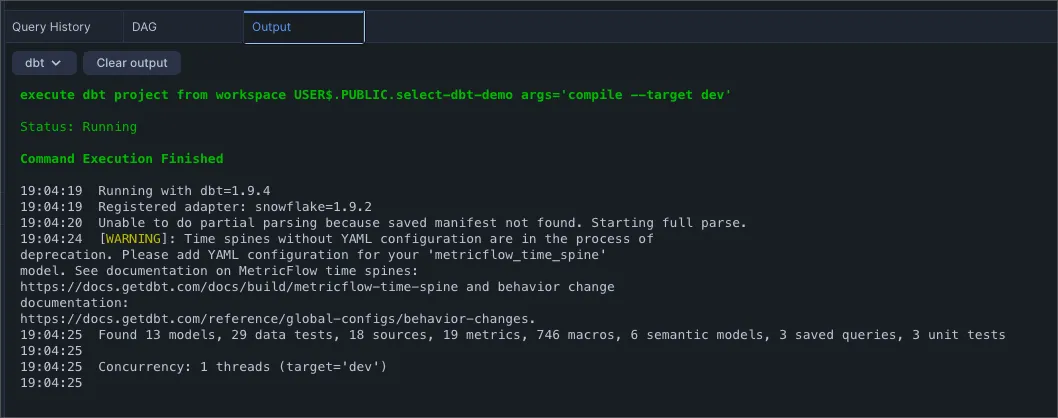

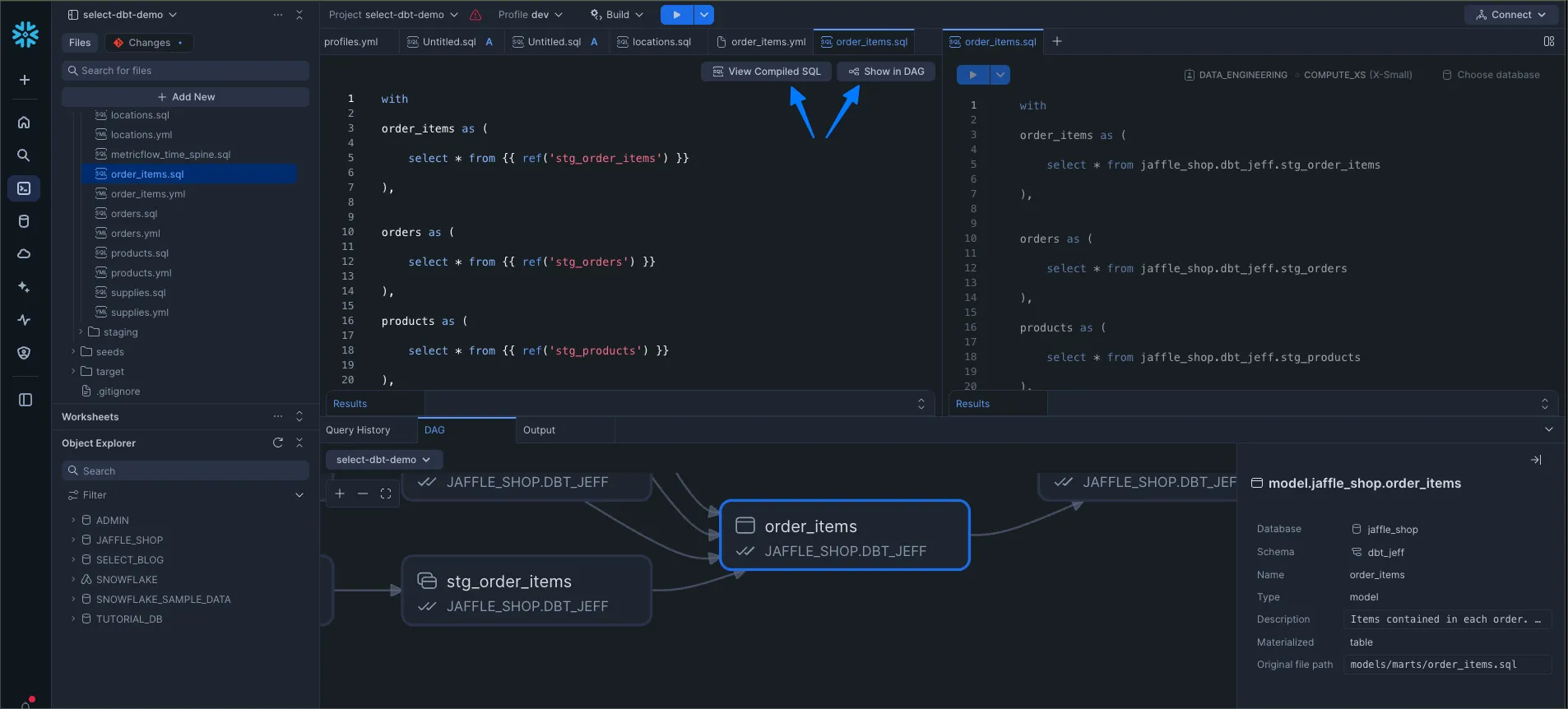

In Workspaces, you can run dbt projects directly in the Snowsight UI. It is easiest to start by connecting an existing repo as shown above. Once you have a Workspace with a dbt repo connected, Snowflake will ask you to create a profiles.yml. Once you’ve done that, you can run dbt commands from the GUI!

In the bottom pane, you can toggle back and forth between Query History, DAG View, and Output.

The query history view links right to the query profile. Note: This feature is not just for dbt projects; all Workspaces will display the query history. That said, I find it particularly useful to have the dbt query history included in the IDE.

From any model, you can click the “Compile SQL” button to show the compiled SQL next to the templated SQL. The “Show in DAG” button will highlight that model in the DAG pane below.

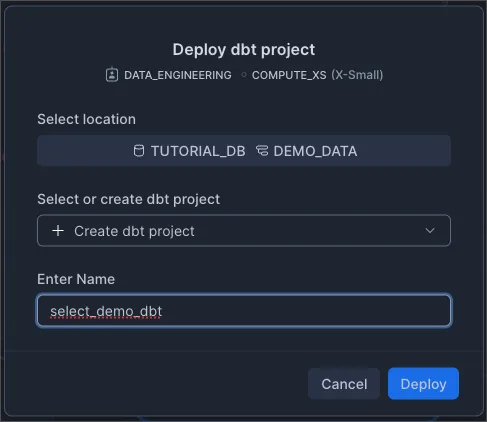

When you are ready to deploy, click “Connect” in the top right, then “Deploy dbt”.

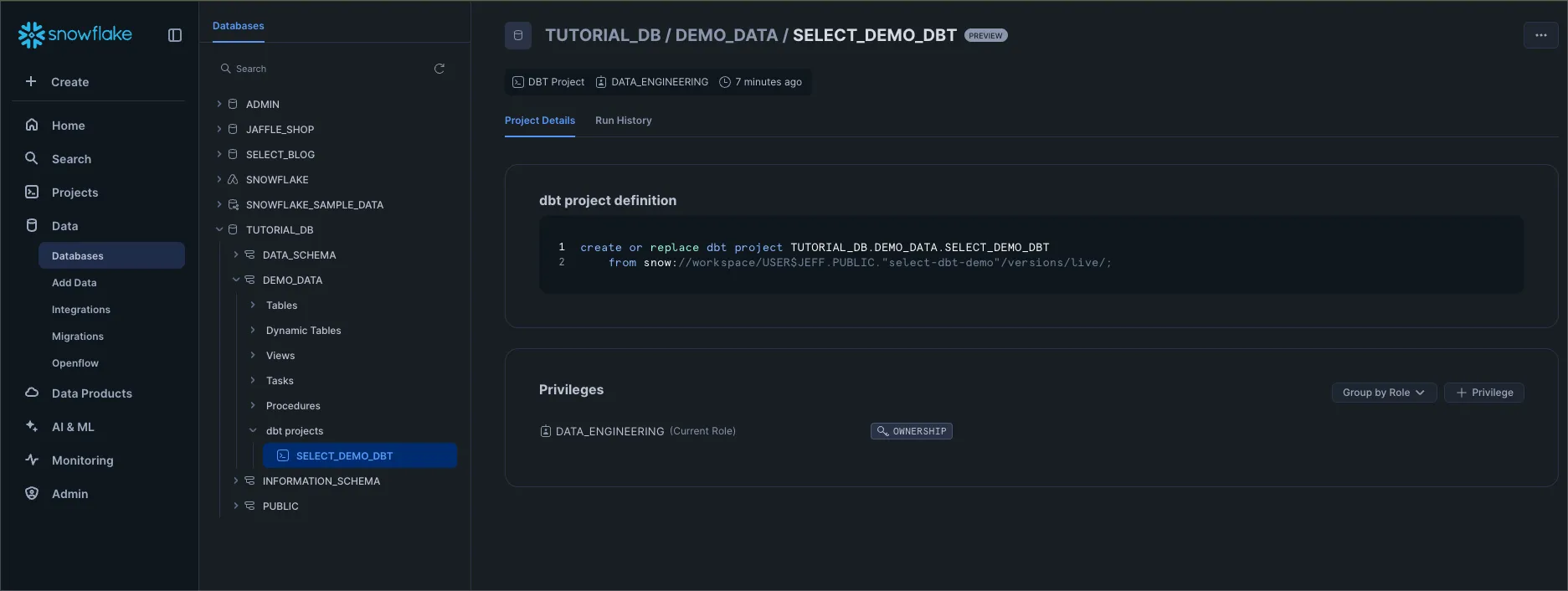

After you deploy, you can see the dbt project under Data → Database / Schema → dbt projects and you can see the run history for all dbt projects under Monitoring → dbt.

Observations and thoughts:

- Pro: I love the idea of being able to create, schedule, and monitor dbt projects from within Snowsight.

- Con: Commands such as dbt deps and dbt build seem to have a very long cold start time.

- Con: The logs don’t print until the run execution is completed. This makes it hard to know where the time is being spent.

- Con: The fact that every build command needs to be done in the GUI is a little clunky. I miss the ability to run DAG slices in the command line. (The interface to pass arguments to the build command is extremely clunky; needs improvement.)

There is a lot we didn’t cover about running dbt projects here. Stay tuned for a dedicated post on the topic!

Task Overview and Graph at the account level — GA

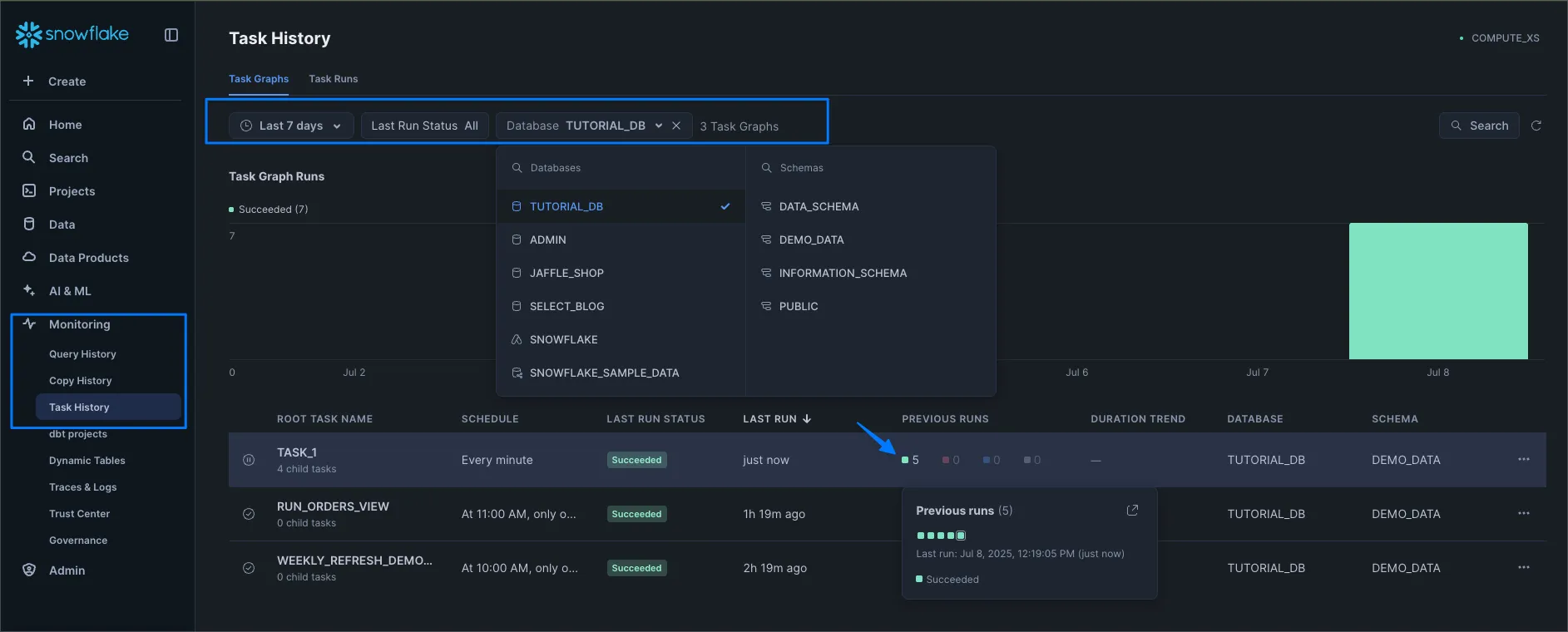

We’ve been able to see a Task Graph at the database level for some time. Now, users can view an overview of all tasks in an account, based on Task Monitoring privileges.

In the left sidebar, click Monitoring, then Task History. Here you can see a filterable list of every task in the account, along with a visual status of the previous runs.

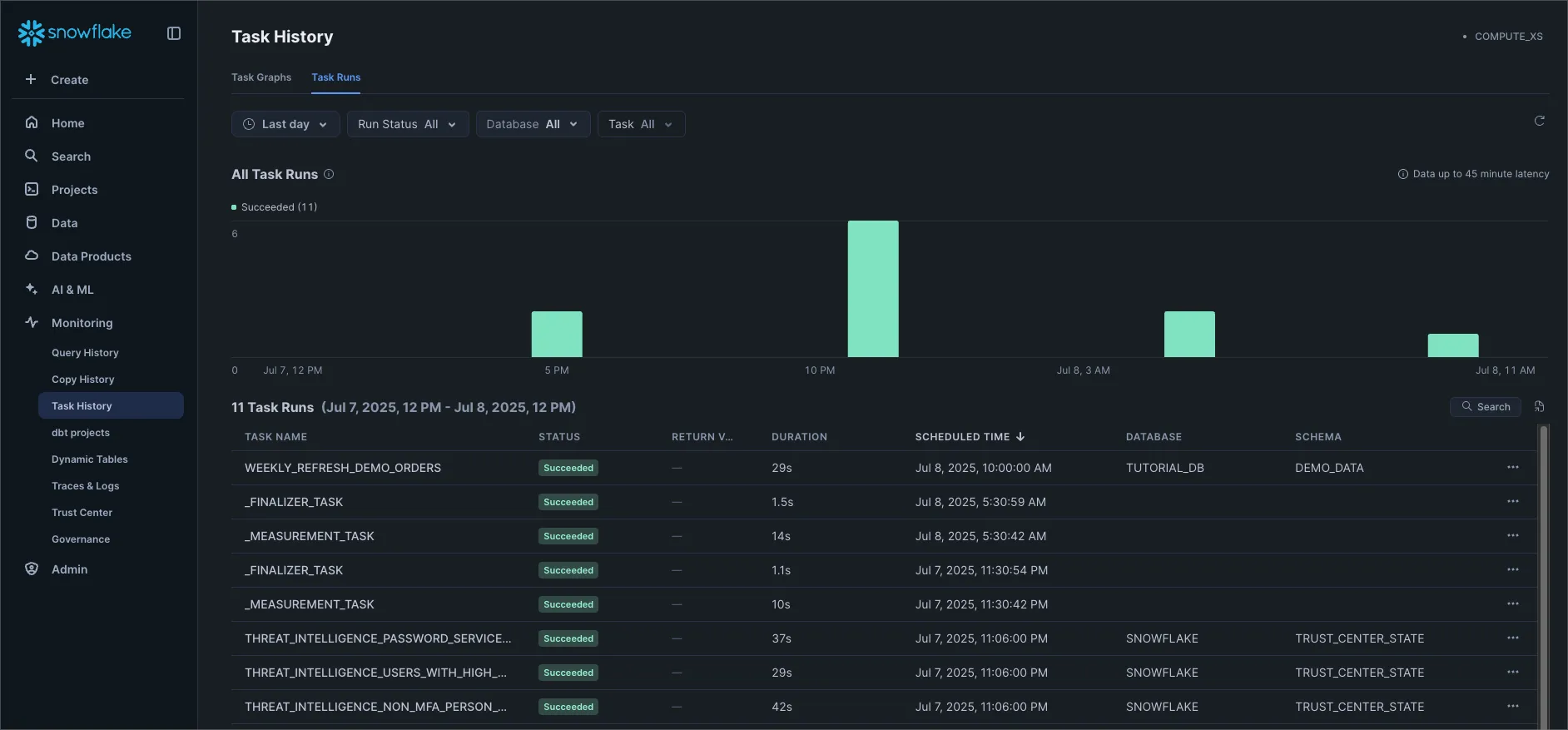

On the Task Runs tab, you can see a list of each task run in the account.

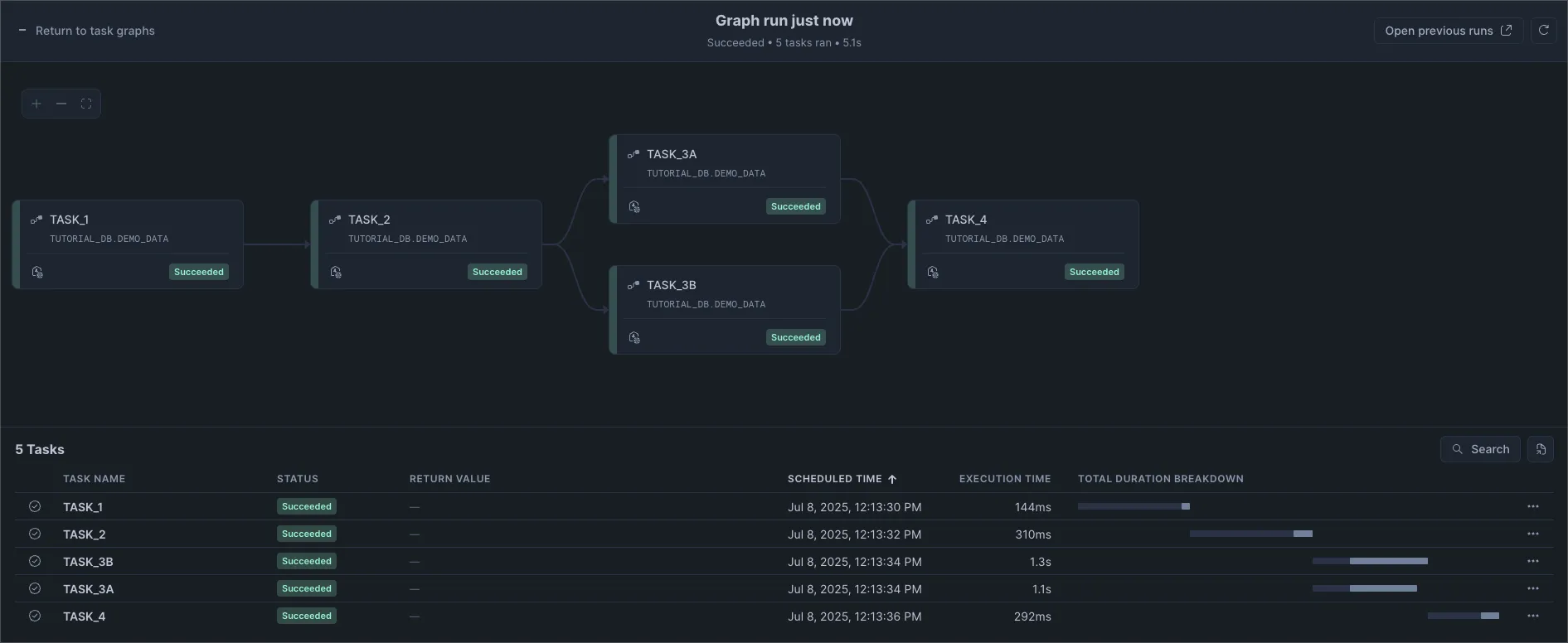

Here you can see the DAG for a completed run along with “Total Duration Breakdown”.

Overall, this UI feels really good! I particularly like the Previous Runs tooltip. I find this to be a very useful observability feature.

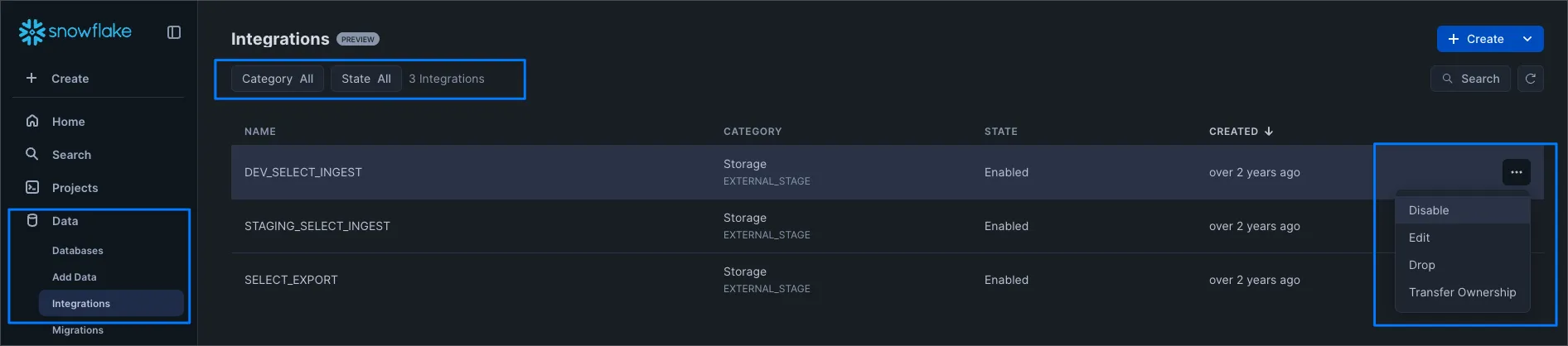

Managing Integrations in Snowsight — GA

In Snowflake, an Integration is used to connect to external resources such as storage accounts, Git repos, APIs, and external network locations allowed by Network Rules. Until now, Integrations could only be managed and observed using SQL. Snowflake has added the ability to create, manage, and observe Integrations in the Snowsight UI. This is a big step up in the observability category.

Navigate to Data → Integrations to get an account-wide view of Integrations. Use the three-dot menu to manage an existing integration.

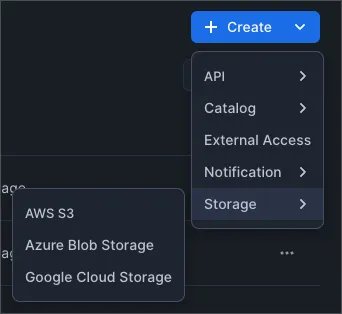

The Create screen allows you to easily create new integrations:

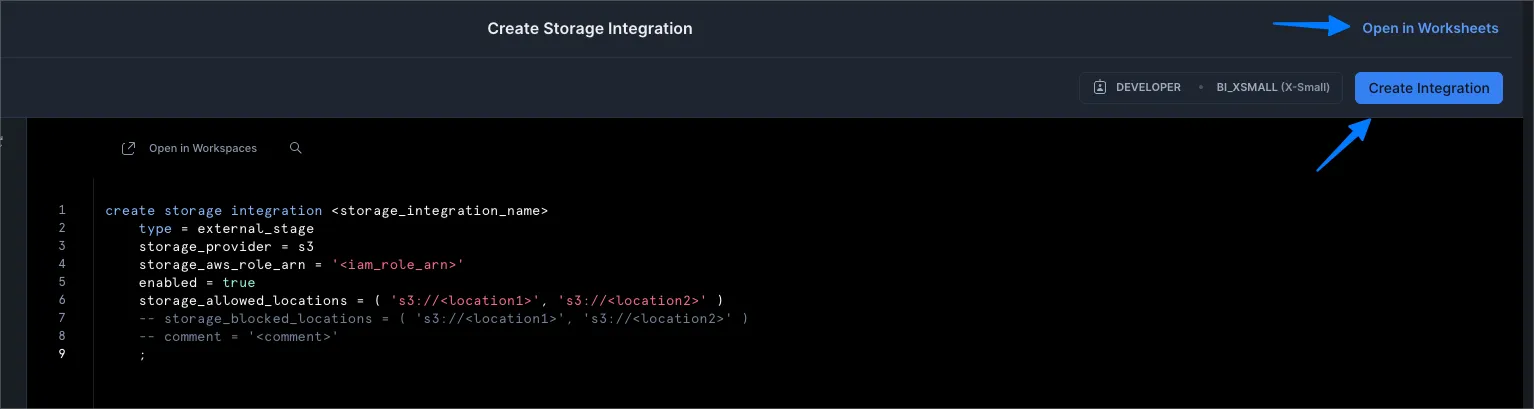

The “create integration” GUI ultimately brings you to a SQL page that looks like a Worksheet, but is more ephemeral, unless you click “Open in Worksheets”.

I wish Snowflake provided a 100% GUI flow for creating Integrations, but I see the challenge since there are so many properties you can have on integrations.

For more info, see the docs.

SQL Updates

During Summit, Snowflake announced several expansions to the SQL dialect for AI-related SQL. We won’t be rehashing that here. Instead, we’ll focus on one of the most useful expansions of the SQL dialect: UNION BY NAME.

Union [all] by Name operator — GA

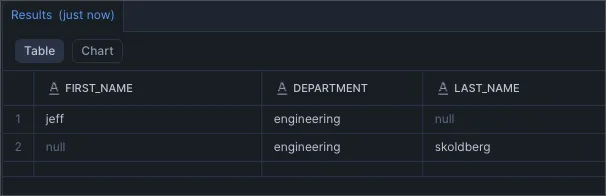

In SQL, UNION and UNION ALL typically require that each part of the union has the same number of columns, lined up in the same order. The new UNION BY NAME syntax automatically lines up columns based on column names. If a column exists in only some parts of the union, or the parts have a different number of columns, Snowflake handles it by returning NULL for the missing column in the parts that lack it.
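To make the matching rule concrete, here is a minimal Python sketch that emulates what UNION ALL BY NAME (and the de-duplicating UNION BY NAME) does, using lists of dicts as stand-ins for query results. This is an illustration of the semantics described above, not Snowflake's implementation; the sample tables and column names are made up, and the column ordering (first appearance, left to right) is an assumption of this sketch.

```python
from typing import Any

Row = dict[str, Any]


def union_all_by_name(*parts: list[Row]) -> list[Row]:
    """Emulate UNION ALL BY NAME over lists of dict rows."""
    # Collect column names in order of first appearance across all parts.
    columns: list[str] = []
    for part in parts:
        for row in part:
            for name in row:
                if name not in columns:
                    columns.append(name)
    # Align every row to the combined column list; a column missing
    # from a given part is filled with None, standing in for NULL.
    return [
        {name: row.get(name) for name in columns}
        for part in parts
        for row in part
    ]


def union_by_name(*parts: list[Row]) -> list[Row]:
    """Emulate UNION BY NAME: same alignment, then drop duplicate rows."""
    seen = set()
    result = []
    for row in union_all_by_name(*parts):
        key = tuple(row.items())  # assumes hashable values
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


# Hypothetical sample data: different column order, plus an extra column.
orders_2024 = [{"id": 1, "amount": 10.0}]
orders_2025 = [{"amount": 20.0, "id": 2, "channel": "web"}]

combined = union_all_by_name(orders_2024, orders_2025)
print(combined)
# [{'id': 1, 'amount': 10.0, 'channel': None},
#  {'id': 2, 'amount': 20.0, 'channel': 'web'}]
```

Note how the 2025 rows list amount before id and the 2024 rows have no channel at all; both differences are resolved by name rather than by position.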

As someone who has used the dbt_utils.union_relations macro more times than I can count, I welcome this native Snowflake feature because it works on CTEs in addition to Views and Tables. This feature is a huge win!

Data Engineering Updates

Snowflake offered one significant change in the Data Engineering category (outside of the Summit Announcements) in June.

Artifact Repository — GA

The Artifact Repository lets you use Python packages from the Python Package Index (PyPI) within Snowpark Python user-defined functions (UDFs) and stored procedures. Unfortunately, this feature is not available for Notebooks or Python Worksheets, although the most popular packages have always been available for those.

In order to use this feature, the built-in database role SNOWFLAKE.PYPI_REPOSITORY_USER must be granted to a user role.

Then you can specify any PyPI package in the function or procedure definition by including the ARTIFACT_REPOSITORY argument.
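A minimal sketch of both steps, written as a Python helper that renders the SQL rather than running it. The role, function, and package names are hypothetical placeholders, and the parameter names (ARTIFACT_REPOSITORY, ARTIFACT_REPOSITORY_PACKAGES) and the shared repository name reflect the Snowflake documentation as we understood it at the time of this release; treat them as assumptions and confirm against the Artifact Repository overview. The example creates a UDF, but the same arguments apply to stored procedures.

```python
# Sketch only: renders SQL text; nothing here connects to Snowflake.
# Role, function, and package names are hypothetical placeholders.

GRANT_SQL = "GRANT DATABASE ROLE SNOWFLAKE.PYPI_REPOSITORY_USER TO ROLE {role};"

# Parameter names below are assumptions based on the docs at release time.
CREATE_FUNCTION_SQL = """
CREATE OR REPLACE FUNCTION {function}()
  RETURNS STRING
  LANGUAGE PYTHON
  RUNTIME_VERSION = 3.12
  ARTIFACT_REPOSITORY = snowflake.snowpark.pypi_shared_repository
  ARTIFACT_REPOSITORY_PACKAGES = ('{package}')
  HANDLER = 'main'
AS
$$
import {module}

def main():
    # Returning the module name shows the PyPI package was importable.
    return {module}.__name__
$$;
""".strip()


def artifact_repository_statements(role, function, package, module):
    """Return the GRANT and CREATE FUNCTION statements, in the order to run them."""
    return [
        GRANT_SQL.format(role=role),
        CREATE_FUNCTION_SQL.format(function=function, package=package, module=module),
    ]


if __name__ == "__main__":
    for statement in artifact_repository_statements(
        role="analyst_role",
        function="hello_pypi",
        package="scikit-learn",
        module="sklearn",
    ):
        print(statement, end="\n\n")
```

The package name (what you would pip install) and the module name (what you import) are passed separately because they often differ, as with scikit-learn and sklearn.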

This is a huge improvement over the previous process, which involved copying Python wheels to Snowflake Stages. Snowflake is making it much easier to use popular libraries!

For more information, see Artifact Repository overview.

Other (non-Summit) Updates:

- Snowflake has increased the size limits for database objects.

- You can now use Nested Stored Procedures, i.e. define a stored procedure inside another stored procedure (or an anonymous block) and call it from there.

- Snowflake Streams now work on top of Iceberg tables, including row-level deletes (standard Streams).

- Malicious IP protection protects all Snowflake accounts by blocking traffic from all known malicious IPs.

- Updates to the Native App Framework.

- Notebooks now offer more preconfigured runtimes.

Wrap Up

I’d like to mention again that many announcements from June 2025 are not covered in this article because they were already covered in the Summit Recap. Future iterations of “Last Month in Snowflake” will be near-comprehensive. We’ll continue to keep you informed of the most critical Snowflake changes!

Jeff Skoldberg is a Sales Engineer at SELECT, helping customers get maximum value out of the SELECT app to reduce their Snowflake spend. Prior to joining SELECT, Jeff was a Data and Analytics Consultant with 15+ years of experience in automating insights and using data to control business processes. From a technology standpoint, he specializes in Snowflake + dbt + Tableau. From a business topic standpoint, he has experience in Public Utility, Clinical Trials, Publishing, CPG, and Manufacturing.
