Snowflake Summit 2025: Product Announcement Recap

Jeff Skoldberg, Thursday, June 05, 2025

Attending Snowflake Summit this year, the largest Data and AI conference in the world, was nothing short of electric. From the moment the keynote kicked off, it was clear: Snowflake is not just iterating; they’re redefining what’s possible across data platforms, AI workloads, and business productivity. With a wave of product launches focused on platform economics, governance, and intelligent automation, this year’s announcements signal a massive leap forward for data-driven organizations.

Let’s summarize all of the announcements at Snowflake Summit 2025!

Platform Economics

Snowflake made several announcements aimed at improving platform economics, covering cost observability as well as better economics for compute and ingestion.

Organizational Usage Views

These views provide a single pane of glass for all Snowflake spend and consumption across regions and clouds, offering a comprehensive understanding of resource usage. This is exciting because it was previously difficult to query views such as QUERY_HISTORY across multiple Snowflake accounts. Be aware, however, that most of these views have a latency of up to 24 hours and incur an extra charge, because they are considered premium views.

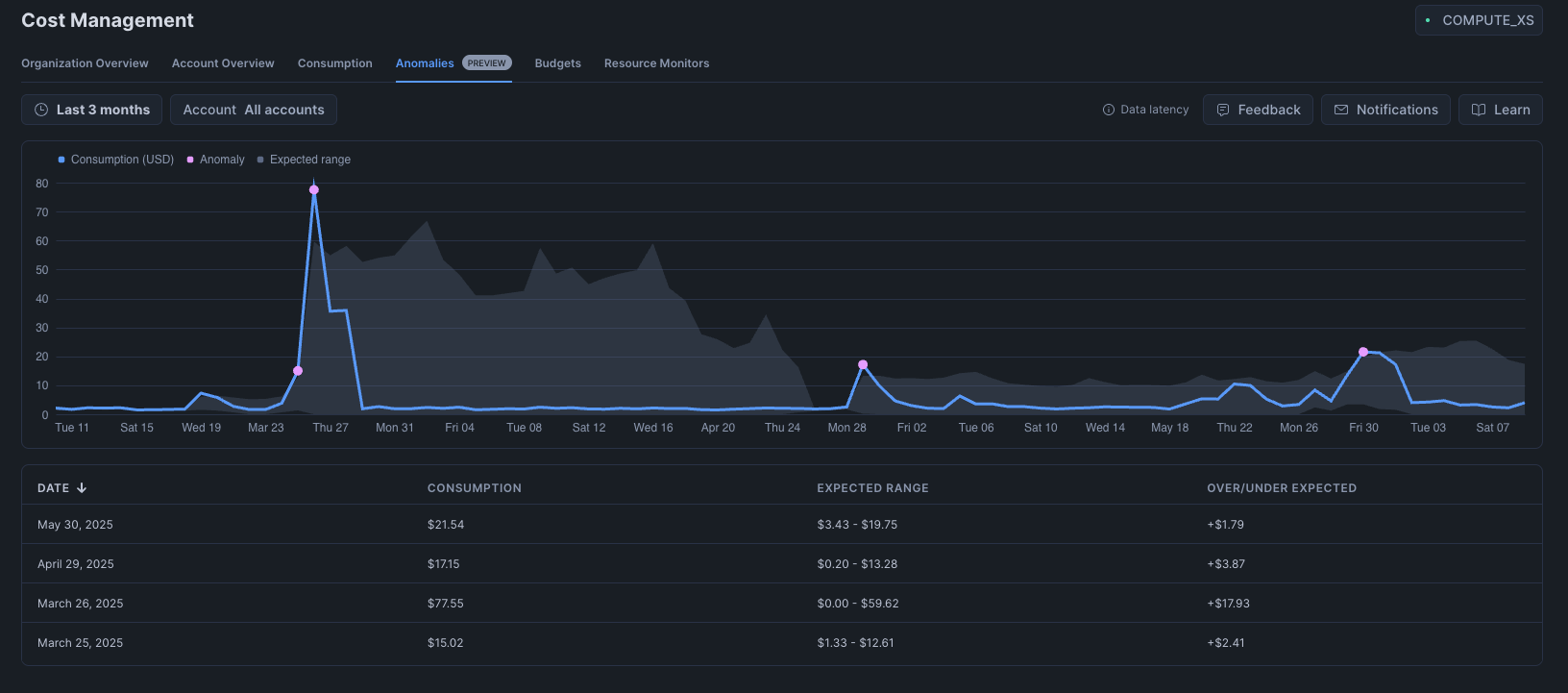

Spend Anomalies

This feature sends notifications when an organization's Snowflake spend falls outside expected ranges, helping teams proactively identify and manage unexpected cost increases. We like that any email address can be subscribed to this alert, unlike resource monitors, whose notifications go only to account admins. It is also convenient that account-level and org-level monitors are available from the same page.
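Snowflake has not said, at least in the material summarized here, exactly how it defines an "expected range". As a rough mental model, here is a minimal sketch of one common approach: a rolling mean plus or minus k standard deviations. The helper name `flag_spend_anomalies`, the 7-day window, and k=3 are illustrative assumptions, not Snowflake's method.

```python
from statistics import mean, stdev


def flag_spend_anomalies(daily_credits, window=7, k=3.0):
    """Hypothetical helper: return the indexes of days whose credit spend falls
    outside mean +/- k * stdev of the preceding `window` days.

    This is a simple rolling z-score rule for illustration only; it is not
    Snowflake's anomaly-detection algorithm.
    """
    anomalies = []
    for i in range(window, len(daily_credits)):
        history = daily_credits[i - window:i]
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation of the window
        lower, upper = mu - k * sigma, mu + k * sigma
        if not lower <= daily_credits[i] <= upper:
            anomalies.append(i)
    return anomalies


# Made-up daily credit totals with one spike on day 8.
spend = [100, 102, 98, 101, 99, 103, 97, 100, 250, 101]
print(flag_spend_anomalies(spend))  # [8]
```

Note that the spike inflates the baseline for the following day, which is why day 9 is not flagged; real detectors usually rely on more robust baselines.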

Budgets by Query Tags and Object Tags

Until now, Snowflake only allowed you to set budgets by warehouse. This new feature lets users classify consumption by query tags and object tags, enabling more granular chargeback models for resource usage tied to specific queries or database objects. We're excited about this feature because it encourages a cost-reduction best practice: budgeting and attributing cost by something other than the virtual warehouse.
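Tag-based budgets boil down to grouping consumption by a label instead of by warehouse. A minimal sketch, assuming you already have per-query credit figures with a query tag attached; the record shape and the `chargeback_by_tag` helper are hypothetical, not a Snowflake view or API.

```python
from collections import defaultdict


def chargeback_by_tag(query_records, untagged_label="UNTAGGED"):
    """Hypothetical helper: sum credits per query tag.

    Each record is assumed to be a dict with "query_tag" and "credits" keys.
    Queries with no tag are collected under `untagged_label`.
    """
    totals = defaultdict(float)
    for record in query_records:
        tag = record.get("query_tag") or untagged_label
        totals[tag] += record["credits"]
    return dict(totals)


# Made-up per-query credit figures.
records = [
    {"query_tag": "finance", "credits": 1.5},
    {"query_tag": "marketing", "credits": 0.25},
    {"query_tag": "finance", "credits": 0.5},
    {"query_tag": "", "credits": 0.75},
]
print(chargeback_by_tag(records))
# {'finance': 2.0, 'marketing': 0.25, 'UNTAGGED': 0.75}
```

Giving untagged usage its own bucket makes gaps in tagging coverage visible instead of silently dropping that spend.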

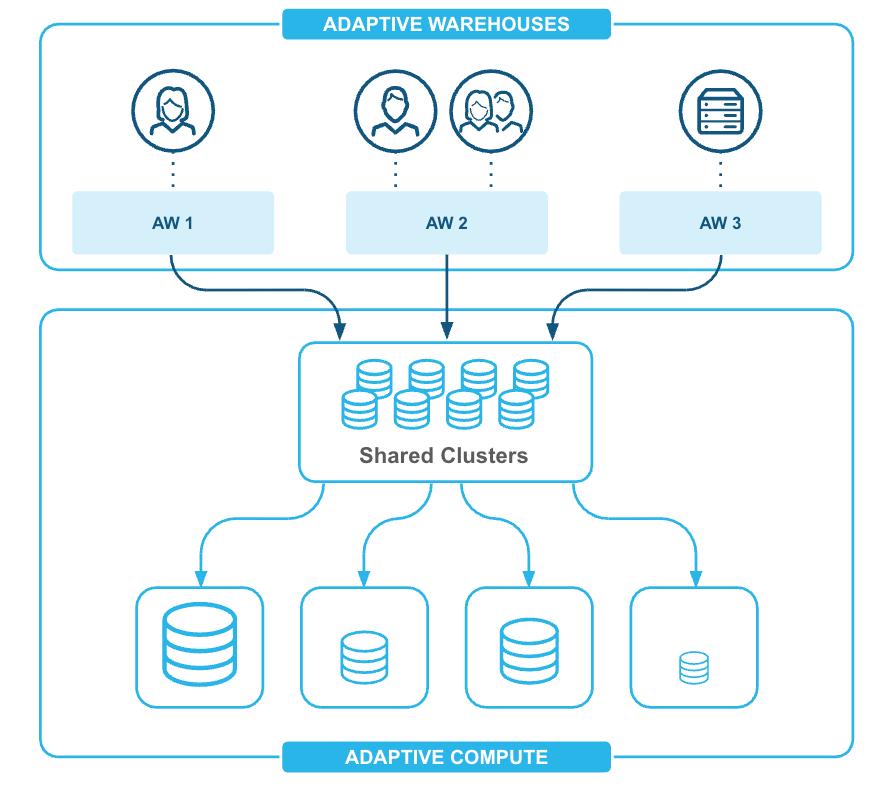

Adaptive Compute

One of the most exciting announcements from Summit, Adaptive Compute lets customers specify policies and intent while Snowflake automatically determines the necessary compute resources (types, sizes, scaling properties), for easier management, better performance, and optimal utilization. You don't need to decide what size warehouse to run a job on; Snowflake decides for you and bills you only for the compute you use, with no idle time after your query finishes.
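To see why "no idle time" matters, here is a back-of-the-envelope sketch comparing billed seconds for a conventional warehouse, which keeps running for an idle window before it suspends, with a model that bills only for query runtime. The helper names, the 600-second idle window, the 60-second minimum per resume, and the assumption that the queries run back to back after a single resume are all illustrative; the sketch ignores per-size credit rates.

```python
def billed_seconds_fixed_warehouse(query_runtimes, idle_before_suspend=600,
                                   minimum_seconds=60):
    """Hypothetical model: queries run back to back after one resume, then the
    warehouse keeps running (and billing) for `idle_before_suspend` seconds.
    `minimum_seconds` is an assumed minimum charge per resume."""
    active = sum(query_runtimes)
    return max(active + idle_before_suspend, minimum_seconds)


def billed_seconds_usage_only(query_runtimes):
    """Hypothetical model of usage-only billing: pay for query runtime alone."""
    return sum(query_runtimes)


runtimes = [20, 40, 30]  # seconds, made-up example
fixed = billed_seconds_fixed_warehouse(runtimes)
usage = billed_seconds_usage_only(runtimes)
print(fixed, usage)                            # 690 90
print(f"idle share: {1 - usage / fixed:.0%}")  # idle share: 87%
```

Under these assumptions, most of what a short burst of queries costs on a fixed warehouse is idle time, which is exactly the portion usage-only billing removes.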

Simplified Snowpipe Ingest Pricing Model

The new pricing model will be based on the volume of data ingested into Snowflake instead of the number of files plus compute time. The new model is much easier to understand and forecast, and Snowflake says it will provide 50% better economics for data ingestion. Simplified pricing is a win for everyone!
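The two pricing models can be written as simple formulas. In this sketch the rates are parameters, and the numbers in the example are placeholders, not Snowflake's published prices; the function names are hypothetical. The point is that the volume-based model needs only one forecastable input.

```python
def legacy_snowpipe_credits(file_count, compute_credits, credits_per_1000_files):
    """Old model (simplified): a per-file overhead plus the compute consumed.
    Compute depends on file sizes and formats, so it is hard to forecast."""
    return file_count / 1000 * credits_per_1000_files + compute_credits


def volume_snowpipe_credits(gb_ingested, credits_per_gb):
    """New model (simplified): cost scales with data volume alone."""
    return gb_ingested * credits_per_gb


# Placeholder inputs and rates, for illustration only.
print(legacy_snowpipe_credits(20_000, compute_credits=3.0, credits_per_1000_files=0.5))  # 13.0
print(volume_snowpipe_credits(500, credits_per_gb=0.01))
```

Forecasting a month of ingestion under the volume model is just projected GB times one rate, whereas the legacy formula also needs estimates of file counts and compute time.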

Generation 2 Warehouses

These warehouses offer faster hardware and software optimizations, resulting in 2.1x faster performance on Snowflake benchmarks and 1.9x faster performance than managed Spark when scanning Parquet files in Iceberg tables. We've already heard many customer success stories on Gen2 and look forward to hearing more!

Data Governance

Snowflake Horizon Catalog (overall platform for governance)

This serves as the central platform for managing and governing data, offering full interoperability with Iceberg-based REST catalogs. It helps you understand what data exists and govern that data. Here's a list of the updates that fall under Horizon Catalog.

Sensitive Data Insights

Enhancements to the Horizon Catalog include automatic classification, tagging, and tag propagation for sensitive data, improving data privacy and compliance. Auto-propagation of PII tags is very welcome, as managing PII lineage is one of the most challenging aspects of data governance!
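Tag propagation can be pictured as pushing tags downstream through a lineage graph. A minimal sketch, assuming lineage is available as an adjacency list; the object names and the `propagate_tags` helper are hypothetical, and this is not how Snowflake implements the feature internally.

```python
from collections import deque


def propagate_tags(lineage, initial_tags):
    """Push tags from each object to everything downstream of it.

    lineage:      dict mapping an object name to the objects derived from it
    initial_tags: dict mapping an object name to its set of tags
    Returns a new dict mapping every reached object to its full tag set.
    """
    tags = {obj: set(obj_tags) for obj, obj_tags in initial_tags.items()}
    queue = deque(tags)
    while queue:
        source = queue.popleft()
        for target in lineage.get(source, []):
            target_tags = tags.setdefault(target, set())
            missing = tags[source] - target_tags
            # Only revisit a node when it gains new tags; this also makes
            # cycles in the lineage graph safe.
            if missing:
                target_tags |= missing
                queue.append(target)
    return tags


lineage = {
    "raw.customers": ["stg.customers"],
    "stg.customers": ["mart.customer_ltv", "mart.marketing_list"],
}
result = propagate_tags(lineage, {"raw.customers": {"PII:EMAIL"}})
print(sorted(result))
```

Every downstream table ends up carrying the `PII:EMAIL` tag without anyone tagging it by hand, which is the governance win described above.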

Expectations for Data Quality

This feature lets users define expected freshness and volume for their data and receive anomaly alerts when those expectations are not met. Alerting on freshness allows more data engineering workloads to run directly in Snowflake instead of in a third-party orchestrator. This feature is in Private Preview.
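Freshness and volume expectations reduce to two comparisons. A minimal sketch, with a hypothetical `Expectation` shape and `check_expectation` helper; Snowflake's own syntax for defining expectations is not covered here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Expectation:
    max_staleness: timedelta  # newest data must be at most this old
    min_rows: int             # acceptable row-count range for a load
    max_rows: int


def check_expectation(exp, last_loaded_at, row_count, now):
    """Return the list of violated expectations (empty if all are met)."""
    violations = []
    if now - last_loaded_at > exp.max_staleness:
        violations.append("freshness")
    if not exp.min_rows <= row_count <= exp.max_rows:
        violations.append("volume")
    return violations


exp = Expectation(max_staleness=timedelta(hours=2), min_rows=100, max_rows=10_000)
now = datetime(2025, 6, 5, 12, 0, tzinfo=timezone.utc)
print(check_expectation(exp, now - timedelta(hours=3), 50, now))      # ['freshness', 'volume']
print(check_expectation(exp, now - timedelta(minutes=30), 500, now))  # []
```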

AI Model RBAC (Role-Based Access Control)

This lets organizations choose which AI models specific roles can use, ensuring secure and controlled access to AI capabilities. This is critical because some organizations may not want to grant wide-open access to AI, or may want to restrict use of more expensive models.
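Conceptually, model-level RBAC is an allowlist keyed by role, with Snowflake's usual role inheritance on top. A minimal sketch under those assumptions; the role names, model names, and helper functions are hypothetical and are not Snowflake's grant syntax.

```python
def effective_models(role, grants, inherits):
    """Collect the models granted to `role` or to any role it inherits from."""
    seen, stack, models = set(), [role], set()
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        models |= grants.get(current, set())
        stack.extend(inherits.get(current, []))
    return models


def can_use_model(role, model, grants, inherits):
    return model in effective_models(role, grants, inherits)


grants = {
    "ANALYST": {"small-model"},
    "DATA_SCIENTIST": {"large-model"},
}
# DATA_SCIENTIST inherits everything granted to ANALYST.
inherits = {"DATA_SCIENTIST": ["ANALYST"]}

print(can_use_model("ANALYST", "large-model", grants, inherits))         # False
print(can_use_model("DATA_SCIENTIST", "small-model", grants, inherits))  # True
```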

Security Enhancements

These include deprecating password-only sign-ins and introducing programmatic access tokens, passkeys, authenticator apps, dark web credential monitoring, and IP blocking. Personally, I'm most excited about passkey authentication, as it greatly reduces the friction of MFA. Passkeys integrate seamlessly with your password manager (or your OS key system), and you just click a button to log in.

Horizon Co-Pilot

An AI assistant within the Horizon Catalog that allows users to ask questions about unprotected objects or missing tags, streamlining governance tasks. This will enable less technical users to ask questions about their data assets. This feature is in Private Preview.

External Asset Management

In another huge and exciting announcement, Horizon Catalog now extends to discovering, exposing, and managing assets outside Snowflake. Initial support will cover Power BI, Tableau, dbt, Airflow, SQL Server, Postgres, MySQL, and Databricks. It is unclear to what extent you can discover and manage these assets and how this works in the UI, but we're very eager to learn more.

Internal Marketplace Enhancements

Improvements include the ability to curate and publish data products (notebooks, ML models, datasets), new request approval flows, simplified management, and a refreshed user interface. This will streamline end users' ability to request access to datasets. Currently in Public Preview.

Data Engineering and Ingestion

Snowflake announced several new Data Engineering features including OpenFlow, Workspaces, and dbt in Snowflake.

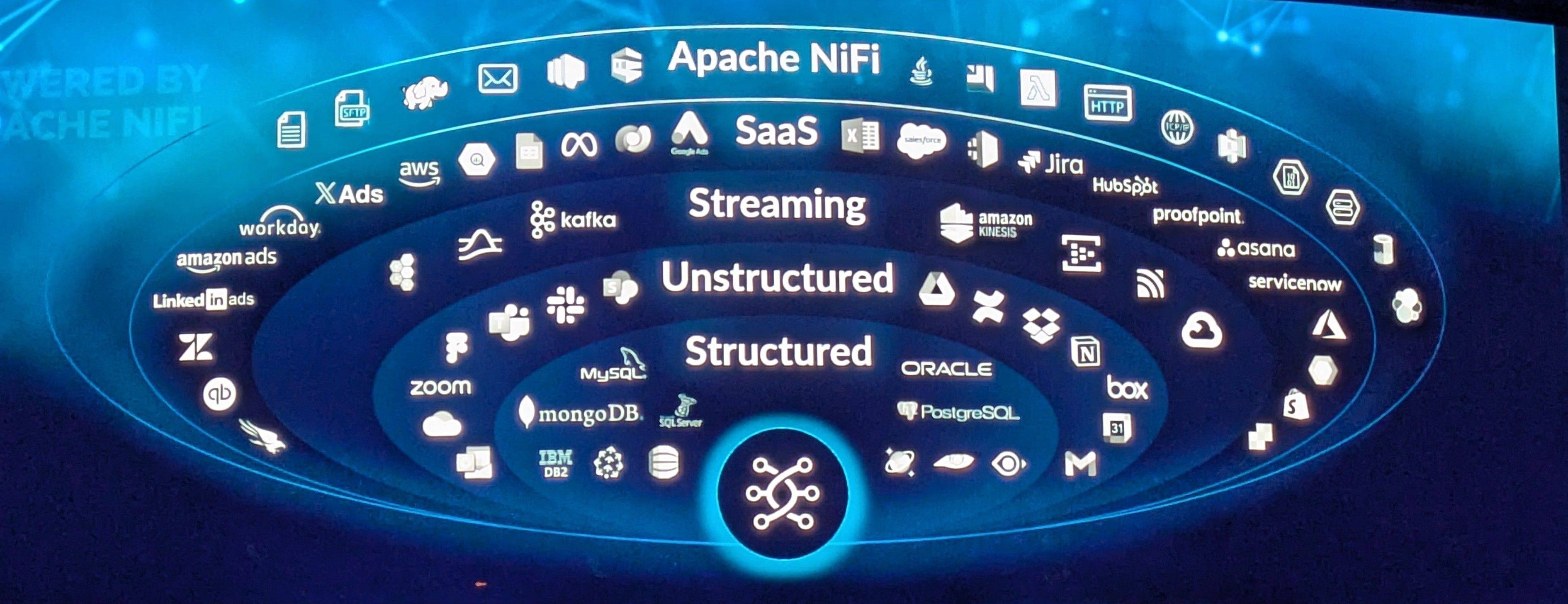

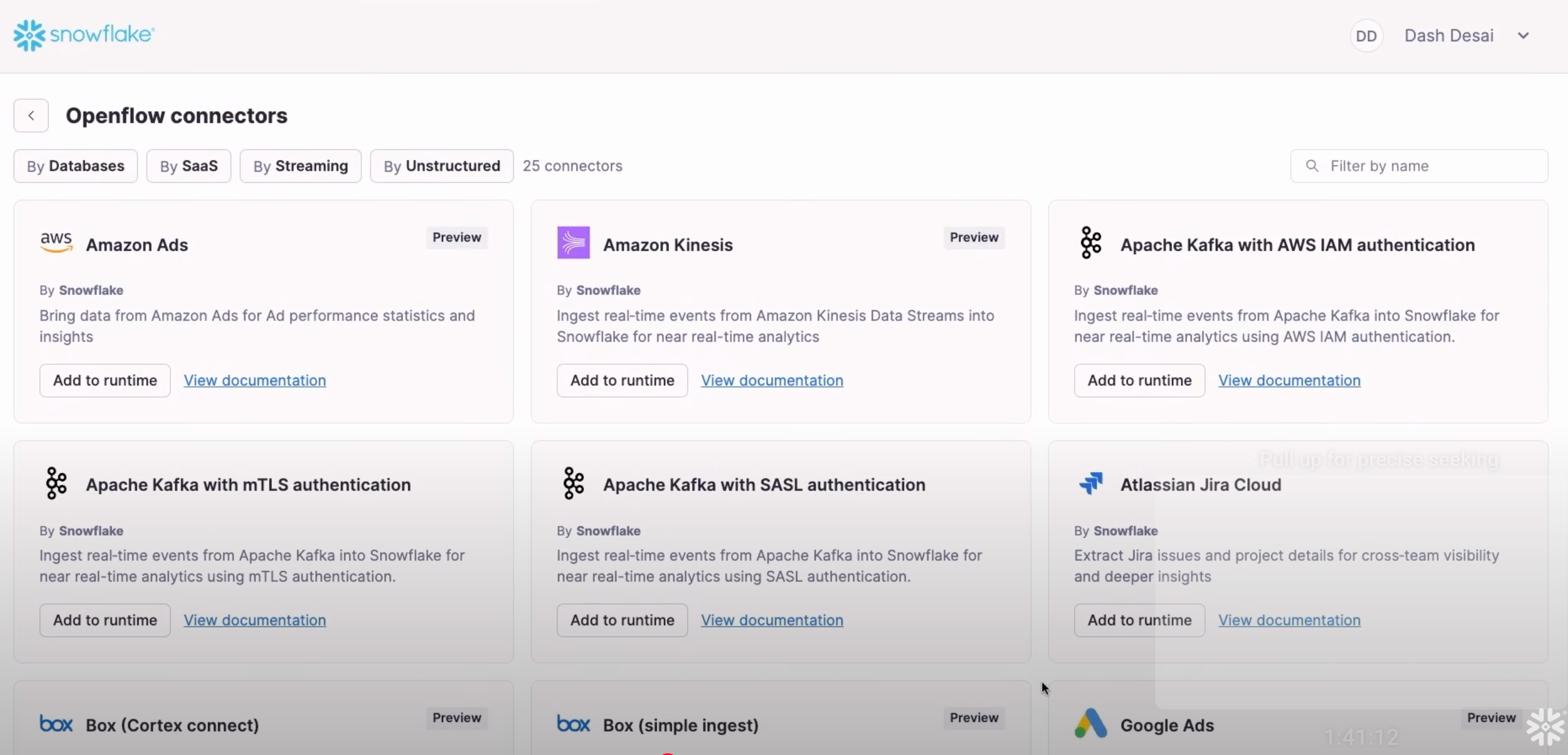

Snowflake OpenFlow

Snowflake OpenFlow is a new managed service for ingesting and processing data from a variety of structured and unstructured sources, including SharePoint, Slack, Google Drive, and the most popular RDBMSs. It is built on Apache NiFi and supports deployment on Snowflake-managed resources or in custom VPCs.

Snowflake has been slowly entering the connector market, but this announcement signals that it wants to become a primary player in data ingestion. If the managed service is truly seamless, this is a huge win for customers, who would have one less vendor to manage. However, as of today, it requires you to set up infrastructure in AWS, which is probably a deal breaker for customers looking for something fully managed.

Oracle Partnership (XStream API Integration)

The XStream API integration enables seamless, near real-time Change Data Capture (CDC) from Oracle databases into Snowflake. This will be big for customers who use Oracle-based ERP systems (Fusion Cloud, JDE, NetSuite, etc.) and want to enable real-time analytics in Snowflake.
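Whatever the transport, CDC works by replaying an ordered stream of inserts, updates, and deletes against a target. A minimal sketch of that replay step; the event shape (including the `scn` ordering field, modeled loosely on Oracle's system change number) and the `apply_changes` helper are assumptions, not the XStream or Snowflake API.

```python
def apply_changes(target, events):
    """Replay change events in commit order against `target`, a dict keyed
    by primary key. Event dicts are a hypothetical shape for illustration."""
    for event in sorted(events, key=lambda e: e["scn"]):
        op, key = event["op"], event["key"]
        if op in ("INSERT", "UPDATE"):
            target[key] = event["row"]  # upsert the latest row image
        elif op == "DELETE":
            target.pop(key, None)  # tolerate deletes for rows never seen
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


table = {1: {"name": "Acme"}}
events = [
    {"scn": 101, "op": "INSERT", "key": 2, "row": {"name": "Globex"}},
    {"scn": 103, "op": "DELETE", "key": 1},
    {"scn": 102, "op": "UPDATE", "key": 2, "row": {"name": "Globex Corp"}},
]
print(apply_changes(table, events))  # {2: {'name': 'Globex Corp'}}
```

Ordering by the change number rather than by arrival order is what keeps the target consistent when events show up out of sequence.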

Snowpipe Streaming, Newly Revised

The new version of Snowpipe Streaming offers improved SDK access, stateless transformations, pre-clustering at ingest, and high throughput (up to 10 GB/s), and it makes data queryable within 5-10 seconds. The new SDK is still available only in Java, which limits its audience compared to Python. We would love for Snowflake to create a Python SDK (written in Rust, of course) for Snowpipe Streaming!

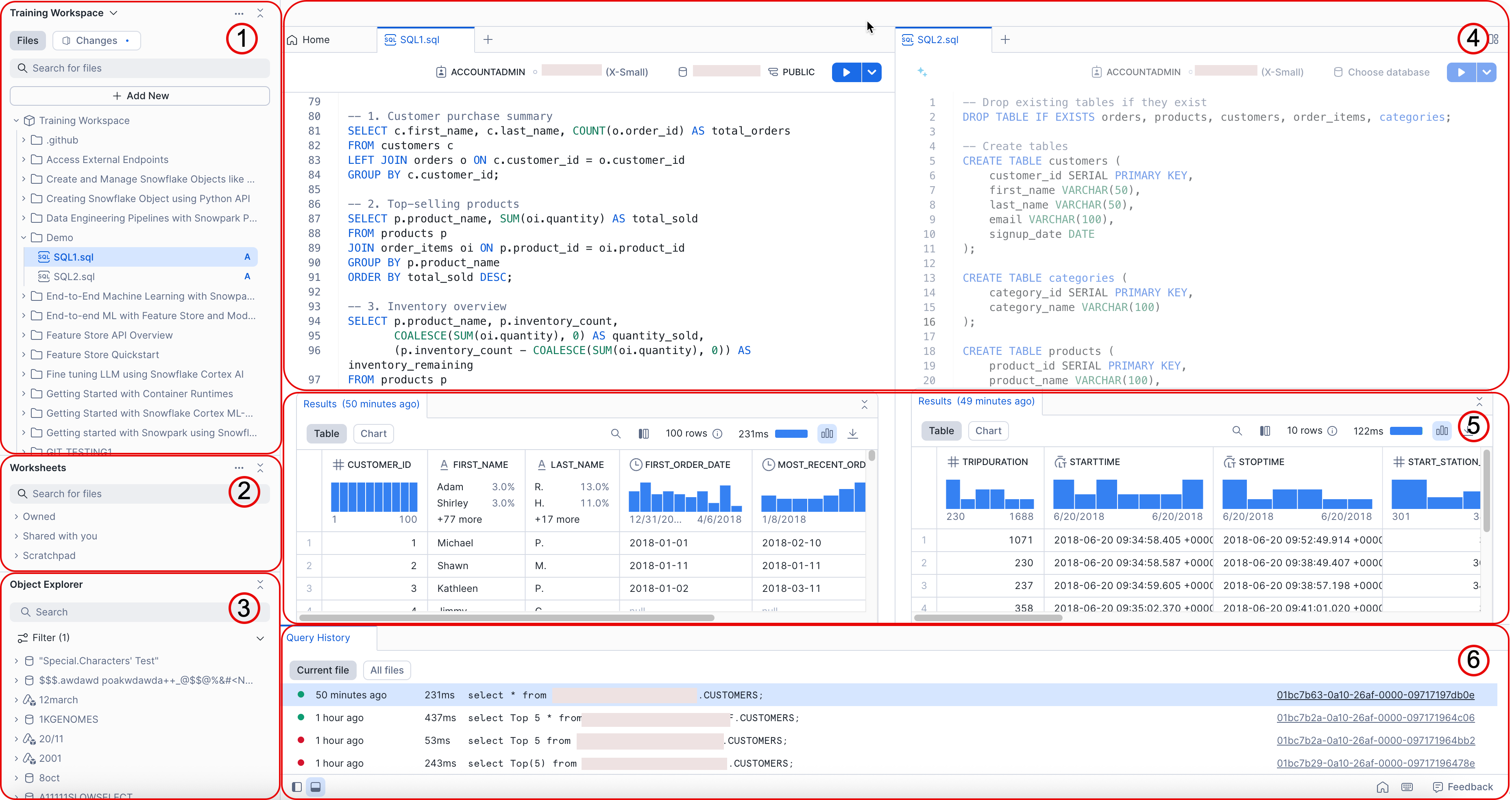

Workspaces in Snowsight

A modern development environment within Snowsight for editing and data curation, supporting worksheets, Streamlit, notebooks, file-based operations, folders, and source code control integration. Workspaces are in Public Preview.

Personally, this is one of my favorite announcements from Snowflake this year, as it simplifies the data engineering process. We now have one place to author, version control, and schedule our end-to-end data pipelines. Before Workspaces, using a Git integration with Snowflake was cumbersome. Now we can navigate and edit files directly from a file tree, make changes, view diffs, and commit and push, all without leaving Snowsight. I can't wait to build with this new tool!

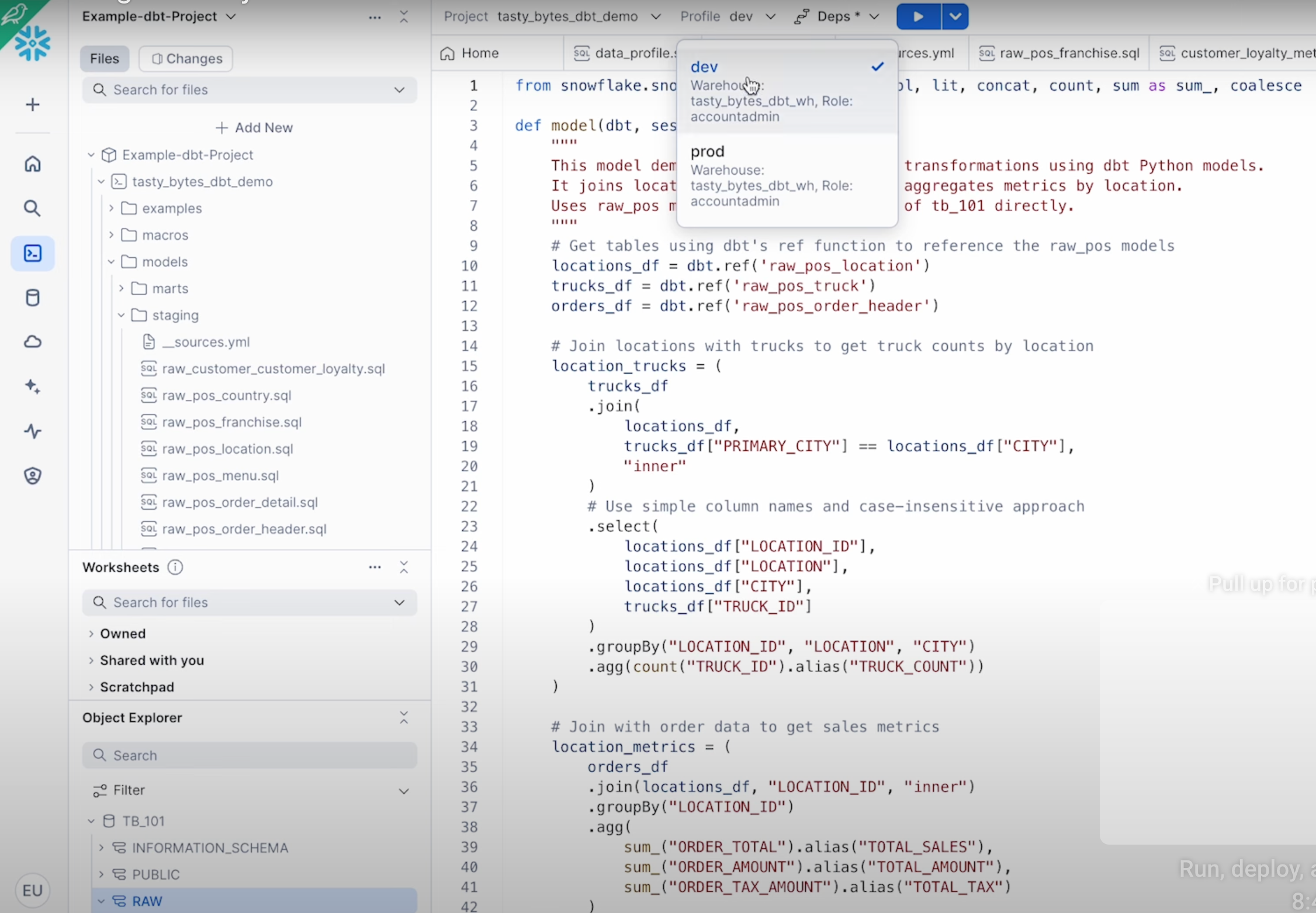

dbt Projects in Snowflake

(Public Preview) An authoring environment allowing users to build, test, and deploy dbt pipelines directly within Snowflake, including observability features and partnership with dbt Labs to bring the dbt Fusion engine.

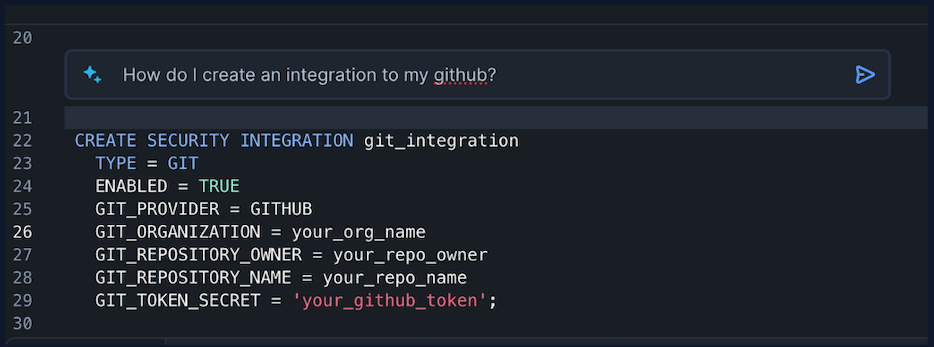

Support for self-hosted git providers

Snowflake now supports self-hosted Git integrations, including GitLab, GitHub Enterprise, Bitbucket, Azure DevOps, and AWS CodeCommit. This lets teams connect private Git repositories to Snowflake for version control, CI/CD, and in-platform development. Users can clone repos, fetch updates, and reference code in SQL, procedures, or apps, all while maintaining governance. This update brings secure and flexible DevOps workflows directly into the Snowflake environment.

Migration Assistant

Migration Assistant is an AI-enabled data migration service that guides users through the migration process using SnowConvert output, streamlining complex transitions.

PostgreSQL in Snowflake

Snowflake has responded to customers who have been requesting a transactional data store by acquiring Crunchy Data, a hardened PostgreSQL platform. Postgres is the platform of choice for AI agents, so having Postgres in Snowflake will make AI-driven app development more convenient.

Snowflake Postgres will deliver a managed PostgreSQL service, offering enterprise capabilities like customer-managed keys and integration with Snowflake's security perimeter, completing its offering with a pure transactional data store.

AI, Agents, and ML

SnowConvert AI

Leverages AI to simplify database migrations, including automated testing and validation of migrated code. SnowConvert AI is standalone software that is available to download now. Its primary feature appears to be dialect conversion: it builds an Abstract Syntax Tree (AST) and a Symbol Table to create a semantic model of the source code, which aids conversion to Snowflake SQL.

Notebooks and Distributed ML APIs

Snowflake notebooks and container runtime are now generally available, along with distributed ML APIs to accelerate model runtime and execution.

Cortex AI SQL

This extends Cortex Functions, offering a collection of functions for entity extraction, aggregation, and filtering across multimodal data (text, image, audio) directly in SQL. Snowflake calls this the biggest update to SQL since GROUP BY ALL, and we agree!

- The specific functions are: ai_filter, ai_agg, ai_summarize_agg, ai_classify, ai_transcribe, ai_embed, ai_similarity

AI Join allows users to join tables based on natural language prompts (semantic join), with query engine optimization for faster and cheaper execution. For example, joining resumes to job descriptions based on an AI's evaluation of fit.
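AI Join, as described, relies on an AI evaluating fit between rows. The sketch below swaps in a much simpler technique, a similarity-threshold join over toy bag-of-words vectors, purely to show the shape of a join whose predicate is semantic rather than key equality. `embed`, `cosine`, and `semantic_join` are hypothetical helpers, not Snowflake's ai_embed or ai_similarity.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity of two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_join(left, right, threshold):
    """Return (left_id, right_id, score) pairs whose similarity >= threshold."""
    right_vectors = {rid: embed(text) for rid, text in right.items()}  # embed once
    pairs = []
    for lid, ltext in left.items():
        lvec = embed(ltext)
        for rid, rvec in right_vectors.items():
            score = cosine(lvec, rvec)
            if score >= threshold:
                pairs.append((lid, rid, round(score, 2)))
    return pairs


resumes = {"r1": "python sql snowflake data engineer",
           "r2": "graphic designer photoshop illustrator"}
jobs = {"j1": "data engineer with snowflake and sql",
        "j2": "senior graphic designer"}
pairs = semantic_join(resumes, jobs, threshold=0.5)
print(pairs)  # [('r1', 'j1', 0.73), ('r2', 'j2', 0.58)]
```

This naive version compares every left row with every right row; that all-pairs cost is presumably what the query-engine optimizations mentioned above aim to reduce.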

It is worth noting that Snowflake is pushing unstructured data storage capabilities, and this unstructured data can be queried via AI. For example, you could have AI evaluate a column containing .wav files or PDFs and ask it for the most common customer complaints found in the recordings or documents.

AI Complete Function

Uses large language models to understand images, transcribe audio, and summarize insights, enabling the storage of text, image, and audio in a single table. This represents a further push into the unstructured data space, which opens up a world of new possibilities for analytics.

AI Aggregate Function

Handles context window limitations for large datasets by using multi-step map-reduce, providing summarized insights across multiple rows of data.
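Snowflake has not detailed its exact algorithm here, but the general map-reduce pattern for summarizing more rows than fit in one context window looks roughly like the sketch below. Assumptions: the budget is measured in characters rather than tokens, `toy_summarize` is a hypothetical stand-in for an LLM call, and all helper names are illustrative.

```python
def chunk(texts, budget):
    """Greedily pack texts into groups whose total length stays within budget.
    A single text longer than the budget still gets a group of its own."""
    groups, current, size = [], [], 0
    for text in texts:
        if current and size + len(text) > budget:
            groups.append(current)
            current, size = [], 0
        current.append(text)
        size += len(text)
    if current:
        groups.append(current)
    return groups


def map_reduce_summarize(texts, summarize, budget):
    """Summarize each chunk (map), then summarize the summaries (reduce),
    repeating until everything fits into a single call."""
    level = list(texts)
    while True:
        groups = chunk(level, budget)
        if len(groups) == 1:
            return summarize(groups[0])
        if len(groups) == len(level):
            raise ValueError("summaries are not shrinking; raise the budget")
        level = [summarize(group) for group in groups]


def toy_summarize(group):
    """Stand-in for an LLM call: keep each distinct word once, sorted."""
    return " ".join(sorted(set(" ".join(group).split())))


complaints = ["late delivery", "late delivery", "damaged box", "late refund", "damaged box"]
print(map_reduce_summarize(complaints, toy_summarize, budget=50))
# box damaged delivery late refund
```

The guard against non-shrinking summaries matters in practice: if each summary is as long as its input, the reduce step would otherwise loop forever.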

Semantic Views

A new type of view designed to capture and expose business context, such as metrics, dimensions, and definitions, around data for both AI and BI use cases.
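The core idea is that metric and dimension definitions live in one place, so a BI tool or an AI agent can ask for "total_revenue by region" without re-deriving the logic. A minimal in-memory sketch of that idea; the model layout and the `query_semantic` helper are hypothetical and are not Snowflake's semantic view DDL or Semantic SQL syntax.

```python
from collections import defaultdict

# Hypothetical semantic model: each definition is written once and reused.
SEMANTIC_MODEL = {
    "dimensions": {
        "region": lambda row: row["region"],
    },
    "metrics": {
        "total_revenue": lambda rows: sum(row["amount"] for row in rows),
        "order_count": lambda rows: len(rows),
    },
}


def query_semantic(model, rows, metrics, dimension):
    """Group rows by a named dimension and compute the named metrics per group."""
    key_of = model["dimensions"][dimension]
    groups = defaultdict(list)
    for row in rows:
        groups[key_of(row)].append(row)
    return {
        key: {name: model["metrics"][name](group) for name in metrics}
        for key, group in groups.items()
    }


orders = [
    {"region": "EMEA", "amount": 100},
    {"region": "AMER", "amount": 250},
    {"region": "EMEA", "amount": 50},
]
print(query_semantic(SEMANTIC_MODEL, orders, ["total_revenue", "order_count"], "region"))
# {'EMEA': {'total_revenue': 150, 'order_count': 2}, 'AMER': {'total_revenue': 250, 'order_count': 1}}
```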

Semantic SQL

A richer query language built specifically to query semantic views, leading to better performance and more accurate responses.

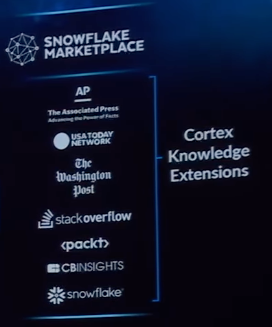

Cortex Knowledge Extensions

These extensions enable organizations to incorporate unstructured data from external sources such as news articles, research papers, and scientific journals into their AI applications, while ensuring intellectual property rights are respected through isolation and clear attribution. These extensions are available through the Marketplace.

Cortex Agents

Cortex Agents, now generally available, enable users to query both structured and unstructured data in Snowflake using natural language. They orchestrate complex workflows, provide reasoning, and interact with various backends and datasets. They automatically route parts of a request to the right tools, like Cortex Analyst or Cortex Search, and refine responses based on context. Agents can also invoke other tools or agents to support agentic applications. Built-in governance ensures secure, compliant access, bringing trustworthy, conversational AI to enterprise data without sacrificing control.
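Cortex Agents decide which tool handles each part of a request. The sketch below swaps that planning for a deliberately naive keyword router, assuming the request has already been split into sub-questions, just to show the orchestration shape. `route`, `run_agent`, and the stub tools are hypothetical and do not call Cortex Analyst or Cortex Search.

```python
STRUCTURED_CUES = ("how many", "total", "average", "sum of", "count", "trend")


def route(sub_question):
    """Pick a tool name for one sub-question using simple keyword cues."""
    text = sub_question.lower()
    if any(cue in text for cue in STRUCTURED_CUES):
        return "analyst"  # structured data: a text-to-SQL style tool
    return "search"       # unstructured documents: a retrieval tool


def run_agent(sub_questions, tools):
    """Send each sub-question to its routed tool and collect the answers."""
    results = []
    for question in sub_questions:
        tool = route(question)
        results.append((question, tool, tools[tool](question)))
    return results


# Stub tools that only echo what they would have been asked.
tools = {
    "analyst": lambda q: f"[SQL result for: {q}]",
    "search": lambda q: f"[document passages for: {q}]",
}
results = run_agent(
    ["What was total revenue last quarter?",
     "What do customer contracts say about refunds?"],
    tools,
)
for question, tool, answer in results:
    print(tool, "->", answer)
```

Substring matching like this misfires easily (for example, "count" inside "account"), which is one reason production agents use a model to plan and route.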

Microsoft Teams Integration

This integration brings Cortex Agents to Microsoft Teams, making the power of data available directly where business users typically work.

Agentic Products on Marketplace

The Snowflake Marketplace now supports agentic products and applications from partners, expanding the ecosystem of AI-powered solutions.

Snowflake Intelligence

Snowflake Intelligence is a new agent designed to help business users access insights through natural language, without needing SQL or coding skills. It allows users to ask questions about their data using plain English, and the agent intelligently breaks down the request, routes it through the appropriate tools, and delivers answers with transparency and accuracy. It works across structured data and documents already in Snowflake, and supports follow-up questions for deeper analysis. This is important because it empowers business teams to make faster, data-informed decisions without relying on technical teams, all while maintaining enterprise-grade governance and security. Snowflake Intelligence will soon be in Public Preview. You can check your access at ai.snowflake.com, which will be the new UI for non-technical business users.

Data Science Agent

Data Science Agent falls under the umbrella of Snowflake Intelligence, but is tailored for data scientists instead of non-technical business users. Data Science Agent is built to streamline and scale machine learning workflows within Snowflake. It supports natural language prompts to generate, evaluate, and deploy ML models directly against Snowflake data, reducing the need for external tools or complex pipelines. The agent can suggest relevant features, recommend model types, and even automate training and tuning, accelerating the development process. This lowers the barrier to advanced analytics, speeds up experimentation, and keeps all modeling and data governance inside the Snowflake platform.

In-line Co-Pilot

An inline AI assistant that can be activated with a hotkey (Command+i or Control+i) within the Snowflake environment, providing immediate contextual assistance.

Wrap up

With these advancements, Snowflake is laying the foundation for a future where data platforms are not just scalable, but intelligent, efficient, and deeply integrated with business outcomes. Customers benefit from lower costs, better visibility, stronger governance, and AI-powered tools that meet them where they work. It's clear that Snowflake isn't just responding to customer needs; it's anticipating them. The next era of data and AI is here, and it's going to be fast, secure, and deeply transformative. We at SELECT are excited to learn about how you are using these new features!

Jeff Skoldberg is a Sales Engineer at SELECT, helping customers get maximum value out of the SELECT app to reduce their Snowflake spend. Prior to joining SELECT, Jeff was a Data and Analytics Consultant with 15+ years of experience in automating insights and using data to control business processes. From a technology standpoint, he specializes in Snowflake + dbt + Tableau. From a business topic standpoint, he has experience in Public Utility, Clinical Trials, Publishing, CPG, and Manufacturing.
