Snowflake Cortex Search: Overview, Pricing & Cost Monitoring

Jeff Skoldberg, Friday, October 10, 2025

What is Snowflake Cortex Search?

Snowflake's Cortex Search is a fully managed hybrid search service that combines vector, keyword, and semantic search capabilities. It's designed for building RAG (Retrieval Augmented Generation) applications and enterprise search solutions, with Snowflake handling all the embedding, indexing, and serving infrastructure behind the scenes. Unlike traditional keyword search, which only looks for matching keywords, Cortex Search also uses the semantic meaning of a search query to match it against your text data. A user can search a customer service database for “billing problems” and get all tickets related to billing issues, regardless of whether that exact phrase appears in the text.

Our customers have been working with Cortex Search extensively, and one of the most common questions I get is: "How do I keep track of what this is actually costing me?"

The challenge with Cortex Search is that the cost structure is more complex than traditional Snowflake services. Unlike a simple warehouse that you spin up and down, Cortex Search has multiple cost components running simultaneously. Without proper monitoring, you might find yourself with an unexpectedly high bill.

Let’s dive into how Cortex Search works, then review its billing components and how to monitor them.

How Cortex Search Works

Under the hood, Cortex Search uses a hybrid approach combining vector search (powered by Snowflake's Arctic Embed models), keyword search, and semantic reranking to deliver highly relevant results. When you create a service, Snowflake automatically handles the embedding generation, indexing, and serving infrastructure, transforming your source data to get it ready for low-latency serving.

Here's a practical example of setting up and using Cortex Search for customer support tickets:
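A setup like this comes down to two SQL statements: `CREATE CORTEX SEARCH SERVICE` to build the index and `SNOWFLAKE.CORTEX.SEARCH_PREVIEW` to query it. The Python sketch below only renders those statements as strings so their shape is visible. The service, table, column, and warehouse names (`support_search`, `support_tickets`, `transcript_text`, `region`, `ticket_date`, `cortex_wh`) are hypothetical placeholders, and the clause order follows Snowflake's documented syntax as we understand it.

```python
import json


def create_service_sql(service, source_table, search_col, attributes,
                       warehouse, target_lag="1 day",
                       embedding_model="snowflake-arctic-embed-l-v2.0"):
    """Render a CREATE CORTEX SEARCH SERVICE statement.

    Every object name passed in is a placeholder for your own service,
    table, columns, and warehouse.
    """
    select_cols = ", ".join([search_col, *attributes])
    return (
        f"CREATE OR REPLACE CORTEX SEARCH SERVICE {service}\n"
        f"  ON {search_col}\n"                         # column that gets embedded
        f"  ATTRIBUTES {', '.join(attributes)}\n"      # columns usable in filters
        f"  WAREHOUSE = {warehouse}\n"                 # runs the source query on refresh
        f"  TARGET_LAG = '{target_lag}'\n"             # how stale the index may get
        f"  EMBEDDING_MODEL = '{embedding_model}'\n"
        f"  AS SELECT {select_cols} FROM {source_table}"
    )


def search_preview_sql(service, query, columns, limit=10):
    """Render a SEARCH_PREVIEW call with its JSON request argument."""
    payload = json.dumps({"query": query, "columns": columns, "limit": limit})
    literal = payload.replace("'", "''")  # escape single quotes for a SQL string literal
    return f"SELECT SNOWFLAKE.CORTEX.SEARCH_PREVIEW('{service}', '{literal}') AS results"


print(create_service_sql("support_search", "support_tickets", "transcript_text",
                         ["region", "ticket_date"], "cortex_wh"))
print(search_preview_sql("my_db.my_schema.support_search", "billing problems",
                         ["transcript_text", "region"]))
```

The generated strings can be pasted into a worksheet or executed through any Snowflake client, such as a cursor from the Snowflake Python connector.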

Here we’re relying on Cortex Search’s semantic understanding to interpret “billing problems” and return the relevant rows.

The SEARCH_PREVIEW function returns JSON results that include a relevance score for each match. By using PARSE_JSON and FLATTEN, you get clean tabular output showing which transcripts are most relevant to your query, along with their metadata and relevance scores. This makes it easy to integrate search results into applications or further SQL analysis.
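If the search results are consumed from application code instead of SQL, the same flattening can be done client side. This is a minimal sketch, assuming the response is a JSON string with a top-level `results` array holding one object per match. The name of the score field is deliberately a parameter (`score_key`), because the exact key depends on your response; check it before relying on the sort.

```python
import json


def flatten_search_results(response_json, score_key=None):
    """Turn a SEARCH_PREVIEW JSON string into a list of row dicts.

    Assumes a top-level "results" array of objects. If score_key is given,
    rows are sorted by that field, highest first; rows missing it sort last.
    """
    payload = json.loads(response_json)
    rows = [dict(r) for r in payload.get("results", [])]
    if score_key is not None:
        rows.sort(key=lambda r: r.get(score_key, float("-inf")), reverse=True)
    return rows
```

Each returned dict plays the role of one FLATTEN output row, so it can go straight into a DataFrame or an API response.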

You can also apply filters based on metadata columns like region or time periods, making it perfect for scenarios where you need both semantic understanding and structured filtering:
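Filters go into the same JSON request as the query, under a `filter` key, and can only reference columns listed in the service's ATTRIBUTES. The sketch below builds equality filters with the `@eq` and `@or` operators from Cortex Search's filter syntax. The column name `region` and its values are hypothetical.

```python
import json


def eq_filter(column, value):
    """Exact-match filter on one ATTRIBUTES column."""
    return {"@eq": {column: value}}


def any_of(column, values):
    """Match any of several values, e.g. a set of regions."""
    clauses = [eq_filter(column, v) for v in values]
    if not clauses:
        raise ValueError("values must not be empty")
    return clauses[0] if len(clauses) == 1 else {"@or": clauses}


def filtered_payload(query, columns, filter_obj, limit=10):
    """JSON string for the second SEARCH_PREVIEW argument, including a filter."""
    return json.dumps({"query": query, "columns": columns,
                       "filter": filter_obj, "limit": limit})


print(filtered_payload("billing problems", ["transcript_text"],
                       any_of("region", ["EMEA", "APAC"])))
```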

How does Cortex Search pricing work?

Cortex Search has 5 pricing components:

- Serving compute: 6.3 credits per GB/month of indexed data (runs continuously whether you're querying or not)

- Embedding tokens: Cost per million tokens of text in the search column during indexing. This varies by model; see the Snowflake Credit Consumption Table for each model's rate. Example pricing as of September 2025:

- snowflake-arctic-embed-l-v2.0: 0.05 credits per million tokens, making it one of Snowflake’s cheapest AI services!

- Warehouse compute: Standard warehouse rates for materializing and refreshing search data and running queries

- Storage: Search indexes incur storage fees at your standard storage rate (for example, $23/TB/month).

- Cloud services: Usually free (only charged when cloud services usage exceeds 10% of daily warehouse usage)

Real-world example: 10 million customer support tickets, averaging 500 tokens each, embedded with snowflake-arctic-embed-l-v2.0 at 0.05 credits per million tokens:

- Total tokens: 10 million rows × 500 tokens = 5 billion tokens

- Embedding cost: 5 billion tokens ÷ 1 million × 0.05 credits = 250 credits for initial indexing

- At $3/credit, this is $750

- Plus ongoing serving: If your index is 50GB, that's 50 × 6.3 = 315 credits/month just to keep it running

- That's $945 per month at $3/credit.

Unlike warehouses, which auto-suspend when idle, Cortex Search serving costs accrue continuously while the service is running. A 100GB index costs 630 credits monthly (about $1,890 at $3/credit) regardless of query volume. You pay this even if nobody searches.
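The arithmetic above is easy to rerun for your own data volumes. This small Python sketch encodes the two formulas used in the example: one-time embedding credits (rows × tokens per row ÷ 1,000,000 × model rate) and monthly serving credits (index GB × 6.3). The $3/credit price is the example's assumption, not a universal rate, and warehouse, storage, and cloud services costs are left out.

```python
SERVING_CREDITS_PER_GB_MONTH = 6.3  # serving rate from the pricing list above


def embedding_credits(rows, avg_tokens_per_row, credits_per_million_tokens):
    """One-time credits to embed the search column during initial indexing."""
    total_tokens = rows * avg_tokens_per_row
    return total_tokens / 1_000_000 * credits_per_million_tokens


def serving_credits_per_month(index_gb, rate=SERVING_CREDITS_PER_GB_MONTH):
    """Credits accrued each month just to keep the index served."""
    return index_gb * rate


def to_dollars(credits, price_per_credit=3.0):
    """Convert credits to dollars at an assumed price per credit."""
    return credits * price_per_credit


embed = embedding_credits(10_000_000, 500, 0.05)   # the support-ticket example
serve = serving_credits_per_month(50)
print(f"embedding: {embed:.0f} credits (${to_dollars(embed):,.0f})")
print(f"serving:   {serve:.0f} credits/month (${to_dollars(serve):,.0f})")
```

Running it reproduces the figures above: 250 credits ($750) to embed, and 315 credits ($945) per month to serve a 50GB index.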

How to monitor Cortex Search costs & usage

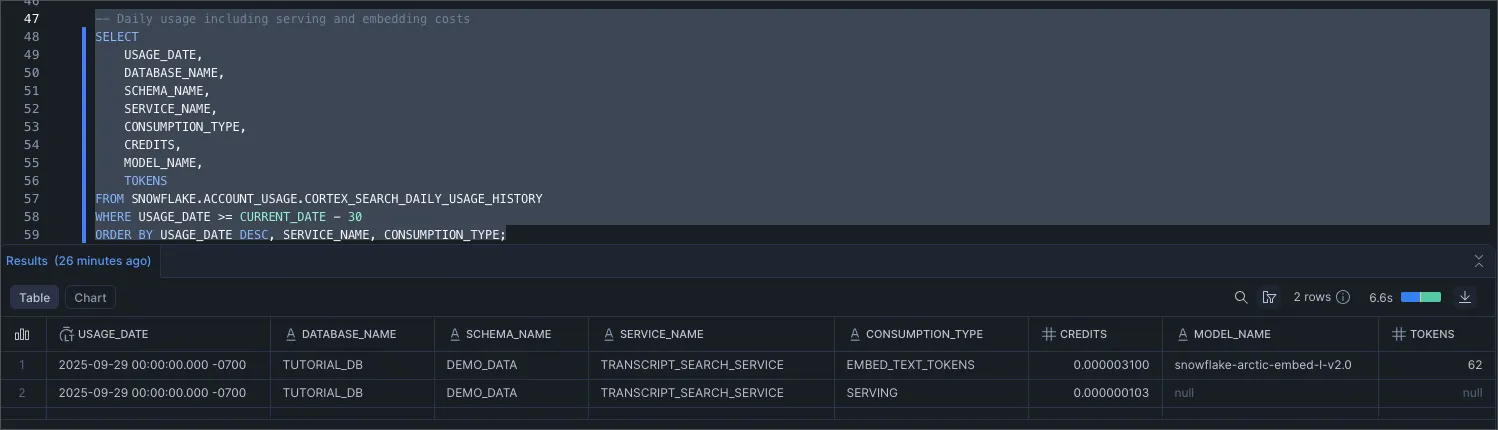

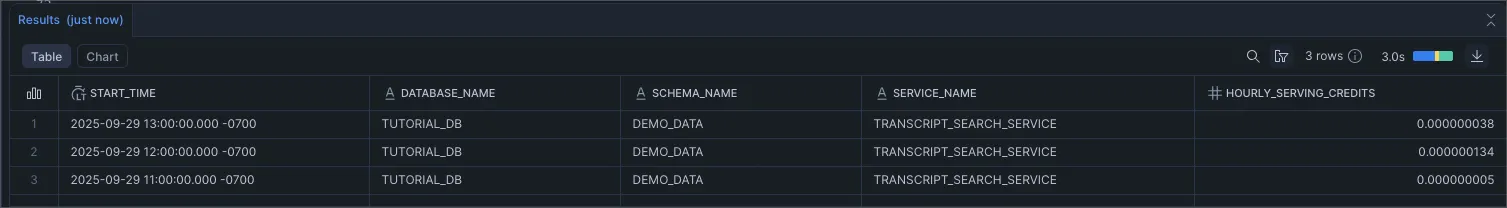

Snowflake provides two dedicated views in the ACCOUNT_USAGE schema for tracking Cortex Search costs. The CORTEX_SEARCH_DAILY_USAGE_HISTORY view breaks down daily costs by consumption type (serving vs embedding), while CORTEX_SEARCH_SERVING_USAGE_HISTORY gives you hourly serving credit details for spotting usage patterns.

Daily example:

A key column in the output is CONSUMPTION_TYPE, which is either embed_text_tokens or serving.
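Once rows from CORTEX_SEARCH_DAILY_USAGE_HISTORY are in hand (for example, fetched with a dictionary cursor), splitting spend by service and consumption type takes only a few lines. This sketch assumes each row is a dict with SERVICE_NAME, CONSUMPTION_TYPE, and CREDITS keys; it lowercases the consumption type so the grouping works regardless of how the values are cased.

```python
from collections import defaultdict


def credits_by_service_and_type(rows):
    """Sum CREDITS per (SERVICE_NAME, CONSUMPTION_TYPE).

    rows: dicts shaped like rows of
    SNOWFLAKE.ACCOUNT_USAGE.CORTEX_SEARCH_DAILY_USAGE_HISTORY.
    Only SERVICE_NAME, CONSUMPTION_TYPE, and CREDITS are read.
    """
    totals = defaultdict(float)
    for row in rows:
        key = (row["SERVICE_NAME"], row["CONSUMPTION_TYPE"].lower())
        totals[key] += float(row["CREDITS"])  # float() also handles Decimal values
    return dict(totals)
```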

Hourly example:

This is a basic rollup of serving credits by hour.
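To spot usage patterns across days, the hourly rows can be folded further into hour-of-day buckets. This sketch assumes rows from CORTEX_SEARCH_SERVING_USAGE_HISTORY arrive as dicts with START_TIME (a datetime or an ISO-8601 string) and CREDITS; it ignores time zones and simply uses the hour of START_TIME as given.

```python
from collections import defaultdict
from datetime import datetime


def serving_credits_by_hour_of_day(rows):
    """Sum serving CREDITS by the hour (0-23) of each row's START_TIME."""
    totals = defaultdict(float)
    for row in rows:
        start = row["START_TIME"]
        if isinstance(start, str):
            start = datetime.fromisoformat(start)
        totals[start.hour] += float(row["CREDITS"])
    return dict(sorted(totals.items()))  # ordered by hour for easy reading
```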

Best practices and recommendations for using Cortex Search

After helping teams implement Cortex Search cost monitoring, here are my key recommendations:

Set up alerts, not just dashboards

Those monitoring queries above are worthless if nobody runs them. For anything you want to monitor in Snowflake, you can wrap the SQL in a scheduled task with a Notification Integration to create a custom monitor that sends alerts to Slack or Teams. If you want extremely easy-to-use monitoring functionality, check out monitors in SELECT.
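Whatever runs the check, the core of an alert is a threshold test on daily totals. This Python sketch shows only that logic; it is not the scheduled-task and Notification Integration setup described above, and delivering the messages to Slack or Teams is left out. The per-day budget is a number you choose, and the input is assumed to be a mapping of date to total Cortex Search credits.

```python
def budget_alerts(daily_credits, budget_per_day):
    """Return one alert message per day whose credits exceed the budget.

    daily_credits: mapping of ISO date string -> total Cortex Search credits.
    """
    return [
        f"Cortex Search used {credits:.2f} credits on {day} (budget {budget_per_day:.2f})"
        for day, credits in sorted(daily_credits.items())
        if credits > budget_per_day
    ]
```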

Suspend services during development

Unlike warehouses which can auto-suspend, Cortex Search services rack up serving costs 24/7. During development and testing, suspend your search services when not actively using them. You can resume them in minutes, but those continuous serving charges add up quickly.
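To put a number on "add up quickly", here is a rough estimate of serving credits avoided by suspending a development service outside working hours. It assumes serving is billed pro rata for the time the service is actually serving, so suspending for h hours a day saves h/24 of the monthly charge; verify that against your own usage views before relying on it.

```python
SERVING_CREDITS_PER_GB_MONTH = 6.3  # serving rate from the pricing list above


def suspended_serving_savings(index_gb, hours_suspended_per_day,
                              rate=SERVING_CREDITS_PER_GB_MONTH):
    """Estimate monthly serving credits avoided by suspending serving.

    Assumes pro-rata billing: h suspended hours a day saves h/24 of the
    monthly serving charge.
    """
    if not 0 <= hours_suspended_per_day <= 24:
        raise ValueError("hours_suspended_per_day must be between 0 and 24")
    return index_gb * rate * hours_suspended_per_day / 24
```

Under that assumption, suspending a 50GB service for 16 hours a day would save about 210 of its 315 monthly serving credits.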

Size your indexes thoughtfully

Every column you include in your search service, whether it's searchable or just an attribute, adds to your serving costs. Don't include columns "just in case." Start minimal and add columns only when you need them. At 6.3 credits per GB/month, a 10GB index costs 63 credits a month in serving compute, while a 100GB index costs 630.

Monitor embedding model costs separately

Watch the daily usage view's CONSUMPTION_TYPE column to see serving vs embedding costs. Embedding spikes often indicate inefficient refresh patterns or unnecessary full rebuilds. If you see consistently high embedding costs, investigate your data update patterns.
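One simple way to flag embedding spikes automatically is to compare each day against the median of the preceding week. This sketch assumes a chronological list of (date, credits) pairs built from the embed_text_tokens rows of the daily usage view; the 3× factor and 7-day window are arbitrary starting points, not recommended values.

```python
from statistics import median


def embedding_spikes(daily_credits, factor=3.0, window=7):
    """Return dates whose embedding credits exceed factor x the trailing median.

    daily_credits: chronological list of (date, credits) pairs.
    The first `window` days only seed the baseline and are never flagged.
    """
    spikes = []
    for i in range(window, len(daily_credits)):
        baseline = median([c for _, c in daily_credits[i - window:i]])
        day, credits = daily_credits[i]
        if credits > factor * baseline:
            spikes.append(day)
    return spikes
```

A median baseline is used instead of a mean so that one earlier spike does not inflate the threshold for the following days.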

Optimize your Cortex Search services

Search costs depend heavily on how you configure the service. Follow these guidelines:

Set appropriate target lags. If your documents don't change frequently, use longer refresh intervals:

Filter search scope when possible. If agents only need recent documents, add filters:
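A recency filter can be computed at request time and placed under the `filter` key of the search request. This sketch assumes a date attribute (hypothetically named `ticket_date`) that supports `@gte` range comparisons against ISO-8601 date strings; check the Cortex Search filter syntax docs for the column types your account supports.

```python
from datetime import date, timedelta


def recent_only_filter(date_column, days, today=None):
    """Keep only rows whose date attribute falls within the last `days` days."""
    today = today or date.today()
    cutoff = today - timedelta(days=days)
    return {"@gte": {date_column: cutoff.isoformat()}}


print(recent_only_filter("ticket_date", 30))
```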

Use primary keys for incremental updates

If your data changes frequently, define primary keys on your search service. This enables optimized refresh paths that can dramatically reduce both embedding costs and refresh times. Without primary keys, any schema change triggers a full re-embedding of your entire dataset.
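Why keys matter can be illustrated with a toy diff. With a stable primary key, a refresh can tell which rows actually changed and re-embed only those; without one, every row looks new. The sketch below is a conceptual illustration of that idea only, not how Snowflake implements its refresh. It compares two snapshots mapping primary key to search text.

```python
def rows_needing_embedding(old_rows, new_rows):
    """Compare two {primary_key: search_text} snapshots.

    Returns (to_embed, to_delete): keys whose text is new or changed, and
    keys that disappeared. Unchanged rows need no new embedding.
    """
    to_embed = [k for k, text in new_rows.items() if old_rows.get(k) != text]
    to_delete = [k for k in old_rows if k not in new_rows]
    return to_embed, to_delete


old = {1: "refund not received", 2: "card declined", 3: "login issue"}
new = {1: "refund not received", 2: "card declined twice", 4: "invoice missing"}
print(rows_needing_embedding(old, new))  # only keys 2 and 4 need new embeddings
```

In this toy case only two of three current rows are re-embedded, which is where the embedding-token savings come from.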

Wrap Up

Like any cost monitoring in Snowflake, we rely on the ACCOUNT_USAGE schema to monitor Cortex Search spend. Use our monitoring queries above as a starting point to decide what you need to monitor, then schedule alerts or weekly reports. Watch for serving credit spikes (increased query volume) and embedding costs during data refreshes.

The goal isn't always minimizing costs; it's getting value from your spend. Understanding these patterns helps you optimize both performance and budget.

If you're implementing Cortex Search, reach out. I'd love to hear what monitoring approaches work for your team.

Jeff Skoldberg is a Sales Engineer at SELECT, helping customers get maximum value out of the SELECT app to reduce their Snowflake spend. Prior to joining SELECT, Jeff was a Data and Analytics Consultant with 15+ years of experience in automating insights and using data to control business processes. From a technology standpoint, he specializes in Snowflake + dbt + Tableau. From a business topic standpoint, he has experience in Public Utility, Clinical Trials, Publishing, CPG, and Manufacturing.
