Snowflake Cortex AI SQL: Overview, Pricing & Cost Monitoring

Jeff Skoldberg, Friday, October 10, 2025

What is Cortex AI SQL?

Snowflake's Cortex AISQL functions bring AI directly into your SQL queries. Instead of exporting data to run it through a model, you just add a column to any select statement and invoke AI right where your data lives. You can analyze text, images, and audio alongside your structured data using functions like AI_COMPLETE, AI_EXTRACT, AI_CLASSIFY, AI_SUMMARIZE, and others, all powered by models from OpenAI, Anthropic, Meta, Mistral, and more.

What Snowflake customers often don't realize is that token-based pricing is fundamentally different from the warehouse-based pricing you're used to. Those costs can add up fast if you're not careful.

I've watched teams process a "quick test" with Claude Opus and burn through significant credits in an afternoon. They didn't realize output tokens count, they picked an expensive model when a cheaper one would work fine, and they had no monitoring in place to catch it.

In this post, we’ll take a look at how Cortex AISQL is priced and show you exactly how to monitor Cortex AISQL costs using Snowflake's account_usage views.

How does Cortex AI SQL Work?

Using Cortex AISQL functions is straightforward - you just call them directly in your SQL statements like any other function. Here's a simple example that classifies customer feedback as positive, negative, or neutral:
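A minimal sketch of such a query, assuming a hypothetical `customer_feedback` table with `feedback_id` and `feedback_text` columns. `AI_CLASSIFY` returns an object, so the first label is pulled out and cast to a string:

```sql
-- customer_feedback, feedback_id, and feedback_text are hypothetical names.
SELECT
    feedback_id,
    feedback_text,
    -- AI_CLASSIFY returns an object; take the first entry of its labels array.
    AI_CLASSIFY(
        feedback_text,
        ['positive', 'negative', 'neutral']
    ):labels[0]::STRING AS sentiment
FROM customer_feedback
LIMIT 10;  -- keep the first run small, since every row consumes tokens
```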

The only prerequisite is that your role needs the SNOWFLAKE.CORTEX_USER database role. By default, this is granted to the PUBLIC role, so most users already have access.

That's it. No external API keys, no data movement, no infrastructure setup. The AI models run directly in Snowflake's environment.
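If an administrator has removed the default grant in your account, access can be granted per role instead. A short sketch, assuming a hypothetical role named `analyst_role`:

```sql
-- Optional: restrict Cortex access by removing the default grant to PUBLIC.
REVOKE DATABASE ROLE SNOWFLAKE.CORTEX_USER FROM ROLE PUBLIC;

-- Grant Cortex access only to the roles that need it.
-- analyst_role is a hypothetical role name.
GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE analyst_role;
```

Limiting who holds this role is also a simple first line of defense against surprise token spend.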

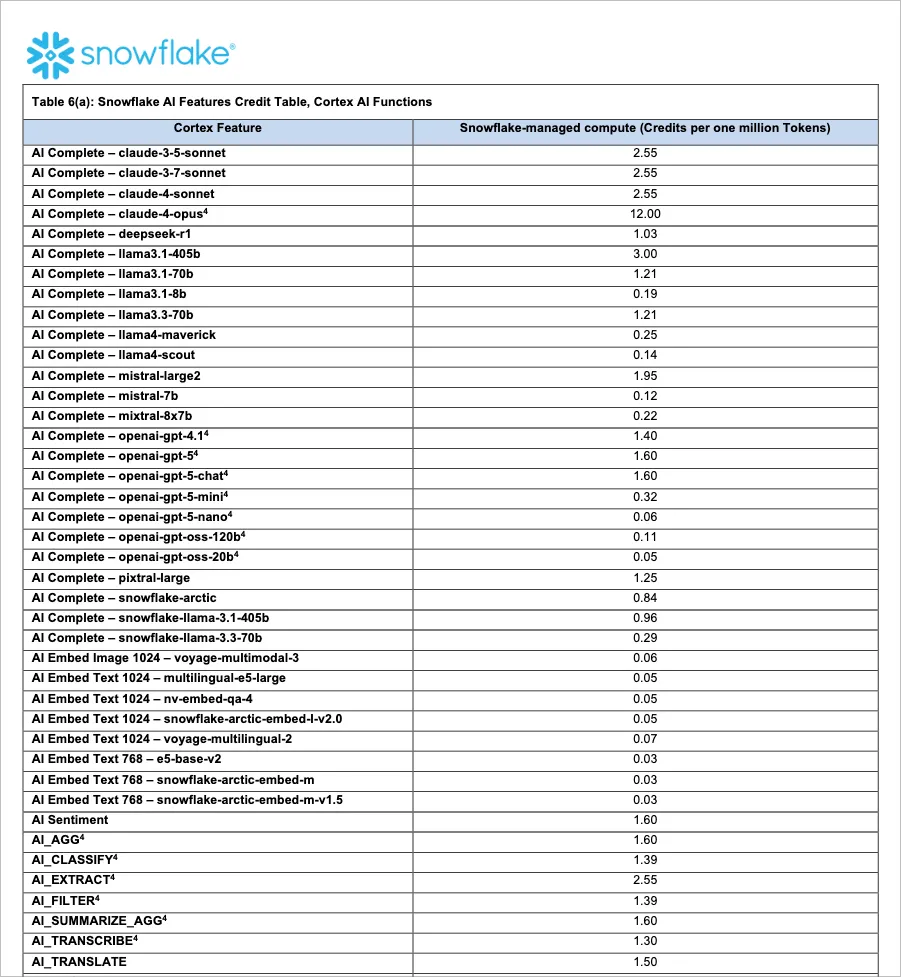

Always refer to the Snowflake Credit Consumption Table

Before we dive deeper on Cortex AISQL costs, I want to mention that pricing details for all things Snowflake can be found in the Credit Consumption Table, which is continually kept up to date. In the screenshot below you can see that pricing varies drastically across the AI models. claude-4-opus is priced at 12 credits per million tokens, while some of the smaller models cost a fraction of a credit per million tokens.

How does Cortex AI SQL pricing work?

With Cortex functions, you pay per token processed (input + output tokens for generative functions, input-only for embeddings).

Generative functions like AI_COMPLETE, AI_CLASSIFY, and AI_SUMMARIZE charge for both input tokens (the text you send to the model) AND output tokens (the response the model generates). If you send 100 tokens and get back 200 tokens, you're paying for 300 tokens total.

The primary **embedding function** is AI_EMBED, which vectorizes data such as text or images. Here you are only charged for input tokens, since the output is just numbers (not generated text).

Understanding Tokens

- 1 token ≈ 4 characters of text

- Use the COUNT_TOKENS function to estimate costs before running expensive operations

- Both input AND output tokens count for functions like AI_COMPLETE, AI_CLASSIFY, AI_SUMMARIZE

- Only input tokens count for AI_EMBED and extraction functions

Pricing examples

We’ll provide some pricing examples to help you understand the order of magnitude, but Snowflake constantly changes pricing, so always refer to the Credit Consumption Table. These examples use $3 per credit for illustrative purposes.

Small Models:

- openai-gpt-5-mini: 0.32 credits = $0.96 per million tokens

- mistral-7b: 0.12 credits = $0.36 per million tokens

- Example: Analyzing 20,000 product reviews on openai-gpt-5-mini. Average review is 100 tokens each: 2M tokens = ~$1.92

Medium Models:

- llama3.1-70b: 1.21 credits = $3.63 per million tokens

- openai-gpt-4.1: 1.40 credits = $4.20 per million tokens

- Example: Same 20,000 reviews running llama3.1-70b = ~$7.26

Large Models (3+ credits per million tokens):

- llama-3.1-405b: 3 credits = $9 per million tokens

- claude-4-opus: 12 credits = $36 per million tokens

- Example: Same 20,000 reviews running claude-4-opus = ~$72
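The arithmetic behind these examples is tokens / 1,000,000 × credits per million tokens × price per credit. The sketch below reproduces it for three of the models, using the rates quoted above and the illustrative $3 per credit. The rates are hard-coded assumptions copied from this post, not values read from Snowflake:

```sql
-- Rates copied from the examples above; check the Credit Consumption Table
-- for current values. $3 per credit is illustrative only.
WITH model_rates (model_name, credits_per_million_tokens) AS (
    SELECT * FROM VALUES
        ('openai-gpt-5-mini', 0.32),
        ('llama3.1-70b',      1.21),
        ('claude-4-opus',     12.00)
)
SELECT
    model_name,
    20000 * 100                                          AS total_tokens,   -- 20,000 reviews x 100 tokens
    20000 * 100 / 1e6 * credits_per_million_tokens       AS credits,
    20000 * 100 / 1e6 * credits_per_million_tokens * 3   AS estimated_usd
FROM model_rates
ORDER BY estimated_usd;
```

Swapping in your own row count, average token length, and contract price per credit gives a quick order-of-magnitude estimate before you run anything. For generative functions, remember to add expected output tokens to the total.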

Cost multipliers of Snowflake Cortex AI SQL

A few things cause credits to burn faster than expected. Here is what to watch out for.

Large outputs multiply costs fast, since generative functions charge for both input and output tokens. A verbose 500-word summary generates roughly 5x the output tokens of a concise 100-word one, and you pay for every one of them. You control this through your prompt.

Model choice matters more than most people realize: premium models like Claude Opus cost 37x more than a basic model like GPT 5 mini, and for many classification or sentiment analysis tasks, the cheaper model works just fine.

How to monitor Cortex AI SQL costs and usage

Snowflake provides two dedicated views for monitoring Cortex AISQL function costs, each serving a different need:

CORTEX_FUNCTIONS_USAGE_HISTORY - Aggregated hourly usage data

- Groups token and credit consumption by function, model, and hour

- Does not contain the query ID, so you can’t join this back to a specific query, user, role, etc.

- Perfect for understanding overall usage patterns and trends

- Shows which functions and models are driving the most costs

- Data aggregated in 1-hour increments

CORTEX_FUNCTIONS_QUERY_USAGE_HISTORY - Individual query-level details

- Shows token and credit consumption for each specific query

- Essential for identifying expensive individual queries or operations

- Includes query IDs for deeper investigation

- Helps you find queries that need optimization

Here are some example queries to get you started.

Hourly or Daily cost summary by function and model:
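A sketch of such a summary, assuming the `start_time`, `function_name`, `model_name`, `tokens`, and `token_credits` columns of CORTEX_FUNCTIONS_USAGE_HISTORY. The 30-day window is an arbitrary choice:

```sql
-- Daily Cortex token and credit consumption by function and model.
-- Change 'day' to 'hour' for the hourly grain the view is stored at.
SELECT
    DATE_TRUNC('day', start_time) AS usage_day,
    function_name,
    model_name,
    SUM(tokens)                   AS total_tokens,
    SUM(token_credits)            AS total_credits
FROM snowflake.account_usage.cortex_functions_usage_history
WHERE start_time >= DATEADD('day', -30, CURRENT_TIMESTAMP())
GROUP BY 1, 2, 3
ORDER BY usage_day DESC, total_credits DESC;
```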

50 most expensive queries. Cost for each query, including the query text, user, and warehouse:
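One way to sketch this is to total credits per query ID in CORTEX_FUNCTIONS_QUERY_USAGE_HISTORY and join to QUERY_HISTORY for the query text, user, and warehouse. The 30-day filter is an assumption added to keep the join small:

```sql
-- Total Cortex credits per query, then enrich with query metadata.
WITH query_costs AS (
    SELECT
        query_id,
        SUM(tokens)        AS total_tokens,
        SUM(token_credits) AS total_credits
    FROM snowflake.account_usage.cortex_functions_query_usage_history
    GROUP BY query_id
)
SELECT
    c.query_id,
    c.total_credits,
    c.total_tokens,
    q.user_name,
    q.warehouse_name,
    q.start_time,
    q.query_text
FROM query_costs AS c
JOIN snowflake.account_usage.query_history AS q
    ON q.query_id = c.query_id
WHERE q.start_time >= DATEADD('day', -30, CURRENT_TIMESTAMP())
ORDER BY c.total_credits DESC
LIMIT 50;
```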

I also found a view called snowflake.account_usage.cortex_aisql_usage_history, although I cannot find docs on it. This view is at the query ID grain and provides usage_time, user_id, and warehouse_id. Maybe this is an undocumented preview feature.

Best practices and recommendations when using Cortex AI SQL

After working with customers on Cortex AISQL costs, here are a few recommendations.

Set up alerts, not just dashboards

Those monitoring queries above are worthless if nobody runs them. For anything you want to monitor in Snowflake, you can wrap the SQL in a scheduled task with a Notification Integration to create a custom monitor that sends alerts to Slack or Teams. If you want extremely easy to use monitoring functionality, check out monitors in SELECT.
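As a rough sketch of the idea, the example below uses a Snowflake alert with an email notification integration rather than a task with a Slack or Teams webhook. The alert name, warehouse, integration name, recipient, and 10-credit threshold are all hypothetical. Account usage views lag behind real time, so treat this as a daily guardrail rather than an instant kill switch:

```sql
-- cortex_spend_alert, monitoring_wh, my_email_integration, the recipient,
-- and the 10-credit threshold are hypothetical placeholders.
CREATE OR REPLACE ALERT cortex_spend_alert
    WAREHOUSE = monitoring_wh
    SCHEDULE = '60 MINUTE'
    IF (EXISTS (
        -- Fire when Cortex token credits in the last 24 hours exceed the threshold.
        SELECT 1
        FROM (
            SELECT SUM(token_credits) AS credits_last_24h
            FROM snowflake.account_usage.cortex_functions_usage_history
            WHERE start_time >= DATEADD('hour', -24, CURRENT_TIMESTAMP())
        )
        WHERE credits_last_24h > 10
    ))
    THEN CALL SYSTEM$SEND_EMAIL(
        'my_email_integration',
        'data-team@example.com',
        'Cortex AISQL spend alert',
        'Cortex token credits exceeded 10 in the last 24 hours.'
    );

-- Alerts are created in a suspended state and must be resumed to run.
ALTER ALERT cortex_spend_alert RESUME;
```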

Always test token counts first

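Before you process 100,000 rows, run COUNT_TOKENS on a sample. Token counts are almost always higher than you expect. A quick test like SELECT AVG(SNOWFLAKE.CORTEX.COUNT_TOKENS('your_model', your_column)) FROM (SELECT your_column FROM your_table LIMIT 1000) tells you what you're dealing with. Take the sample in a subquery: a LIMIT placed after the aggregate would still count tokens for every row in the table.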
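A slightly fuller sketch extends the sample into a projected input cost for the whole table. The `product_reviews` table and `review_text` column are hypothetical, and the 1.21 credits per million tokens is the llama3.1-70b rate quoted earlier in this post. COUNT_TOKENS takes a model name as its first argument, because tokenization differs by model:

```sql
-- product_reviews and review_text are hypothetical names.
WITH sample_rows AS (
    SELECT review_text
    FROM product_reviews
    LIMIT 1000
),
token_stats AS (
    SELECT
        AVG(SNOWFLAKE.CORTEX.COUNT_TOKENS('llama3.1-70b', review_text)) AS avg_tokens,
        MAX(SNOWFLAKE.CORTEX.COUNT_TOKENS('llama3.1-70b', review_text)) AS max_tokens
    FROM sample_rows
)
SELECT
    avg_tokens,
    max_tokens,
    -- Projected INPUT credits for the full table at 1.21 credits per million tokens.
    avg_tokens * (SELECT COUNT(*) FROM product_reviews) / 1e6 * 1.21 AS projected_input_credits
FROM token_stats;
```

The projection covers input tokens only. For generative functions, output tokens come on top of it.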

Start with the smallest model that works

I see this constantly: teams default to GPT-4 or larger Claude models because they're well known and trusted. But for most classification or sentiment analysis tasks, gpt-5-mini works fine and costs far less: at the rates above, roughly 4x less than openai-gpt-4.1 and about 37x less than claude-4-opus. Test with a premium model on 100 rows, then try the cheaper model. You may be surprised to find that the small model produces similar results.

Prompt the AI to keep output short

When you use AI_COMPLETE or AI_SUMMARIZE, you pay for both the prompt AND the response. If your prompt asks for detailed explanations and you're processing thousands of rows, those output tokens add up fast. Be explicit about keeping responses short.
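A sketch of what that can look like, assuming a hypothetical `support_tickets` table. The prompt states the length limit explicitly, and the `max_tokens` model parameter acts as a hard cap in case the model ignores the instruction. The value 60 is an arbitrary example:

```sql
-- support_tickets, ticket_id, and ticket_text are hypothetical names.
SELECT
    ticket_id,
    AI_COMPLETE(
        model => 'llama3.1-70b',
        prompt => 'Summarize this support ticket in one sentence of at most 20 words: '
                  || ticket_text,
        -- Hard cap on output tokens; 60 is an arbitrary example value.
        model_parameters => {'max_tokens': 60}
    ) AS short_summary
FROM support_tickets
LIMIT 100;  -- test on a small sample before scaling up
```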

Cache your results / use incremental updates

If you're classifying the same product descriptions every time someone runs a report, you're wasting money. Materialize the AI results in a table. Yes, this seems obvious, but not everyone is doing this.
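A minimal sketch of the incremental pattern, assuming a hypothetical `product_reviews` source table and a `review_sentiment` results table. Only reviews that have no stored result yet are sent to the model:

```sql
-- product_reviews and review_sentiment are hypothetical table names.
CREATE TABLE IF NOT EXISTS review_sentiment (
    review_id     NUMBER,
    sentiment     STRING,
    classified_at TIMESTAMP_LTZ
);

INSERT INTO review_sentiment (review_id, sentiment, classified_at)
WITH new_reviews AS (
    -- Anti-join: keep only reviews that have not been classified yet.
    SELECT r.review_id, r.review_text
    FROM product_reviews AS r
    LEFT JOIN review_sentiment AS s
        ON s.review_id = r.review_id
    WHERE s.review_id IS NULL
)
SELECT
    review_id,
    AI_CLASSIFY(review_text, ['positive', 'negative', 'neutral']):labels[0]::STRING,
    CURRENT_TIMESTAMP()
FROM new_reviews;
```

Reports then read from `review_sentiment`, and the scheduled job pays for tokens only on new rows.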

Watch which models are actually being used

Run that "Cost by user and warehouse" query regularly. You'll often find someone testing with an expensive model in dev that never got switched to a cheaper option in production. Or worse, you'll find a runaway query processing millions of rows with one of the more expensive Claude models.
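A sketch of a cost-by-user-and-warehouse breakdown, built the same way as the query-level view joined to QUERY_HISTORY. The 7-day window is an arbitrary choice:

```sql
-- Cortex credits by user, warehouse, model, and function for the last 7 days.
SELECT
    q.user_name,
    q.warehouse_name,
    c.model_name,
    c.function_name,
    SUM(c.tokens)        AS total_tokens,
    SUM(c.token_credits) AS total_credits
FROM snowflake.account_usage.cortex_functions_query_usage_history AS c
JOIN snowflake.account_usage.query_history AS q
    ON q.query_id = c.query_id
WHERE q.start_time >= DATEADD('day', -7, CURRENT_TIMESTAMP())
GROUP BY 1, 2, 3, 4
ORDER BY total_credits DESC;
```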

Wrap Up

Snowflake provides everything you need to monitor AISQL costs: account usage views with cost details, transparent (but complicated) pricing, and built-in alerting capability. The challenge is shifting your mental model to understand token-based pricing. Most long-time Snowflake users instinctively think about query duration and warehouse size, but now you'll need to focus on token count and model choice as well.

Treat your first few weeks with Cortex AISQL as a learning period. Start small, monitor obsessively, and establish patterns before you scale up. The pricing model rewards thoughtful implementation and punishes the "run it on everything and see what happens" approach. I've seen teams panic over AI costs that ran wild, when the models are actually priced reasonably if you use them wisely.

If you're processing truly massive datasets daily with AI functions, have a conversation with your Snowflake account team about volume pricing. If you're hitting millions of tokens per day, there could be room to negotiate better rates.

Hopefully you now feel confident to use Cortex AISQL functions without getting surprised by the bill! I'd love to hear about your experiences with these features.

Jeff Skoldberg is a Sales Engineer at SELECT, helping customers get maximum value out of the SELECT app to reduce their Snowflake spend. Prior to joining SELECT, Jeff was a Data and Analytics Consultant with 15+ years experience in automating insights and using data to control business processes. From a technology standpoint, he specializes in Snowflake + dbt + Tableau. From a business topic standpoint, he has experience in Public Utility, Clinical Trials, Publishing, CPG, and Manufacturing.
